You have probably all heard about the Meltdown and Spectre vulnerabilities, which exploit flaws in modern CPU architectures. The biggest problem with these vulnerabilities is that they are hardware bugs, so they cannot easily be fixed in the CPUs themselves. Instead, mitigations have to be implemented in the OS kernel (for each OS individually). Because the flaws stem from CPU performance optimizations and the mitigations are applied at the software level, we should expect some performance penalties.

At the time these vulnerabilities were made public, we were in the process of testing some new SSDs, so we used that server to test how the mitigations would impact the performance of our most used database, Postgres. Before we go into the results, we have to explain the three mitigation features that were added to the Linux kernel:

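- PTI (Page Table Isolation): Meltdown mitigation

- IBRS (Indirect Branch Restricted Speculation): Spectre v2 mitigation

- IBPB (Indirect Branch Prediction Barrier): Spectre v2 mitigation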

All the news reports said that PTI would have the biggest performance impact, while the other two would have a minor effect. They also said that if the CPU supports PCID (Process-Context Identifiers) and INVPCID (Invalidate Process-Context Identifier), these mitigations should have a smaller impact on performance. Our CPU supports both of these features.
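On Linux, one way to check for PCID and INVPCID support is to look for the `pcid` and `invpcid` flags in `/proc/cpuinfo`. The following is a minimal Python sketch under that assumption; the function names are illustrative, not part of any existing tool.

```python
from pathlib import Path
from typing import Dict, Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def pcid_support(cpuinfo_text: str) -> Dict[str, bool]:
    """Report whether the pcid and invpcid flags are present (exact match)."""
    flags = cpu_flags(cpuinfo_text)
    return {"pcid": "pcid" in flags, "invpcid": "invpcid" in flags}


if __name__ == "__main__":
    print(pcid_support(Path("/proc/cpuinfo").read_text()))
```

Matching whole flag names (rather than substrings) avoids treating a related flag such as `invpcid_single` as `invpcid`.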

Benchmark Server

- CPU: 2x Intel Xeon E5-2630 v4 (20 cores total)

- RAM: 29GB (limited with the mem=32G boot parameter)

- DISK: RAID10 4x SM863a 1.9TB (Dell H730p controller)

- OS: CentOS 7.4.1708

- Kernel: kernel-3.10.0-693.11.6.el7.x86_64 (microcode_ctl-2.1-22.2, linux-firmware-20170606-57.gitc990aae)

- DB: PostgreSQL 9.5.10 (postgresql.conf used for these benchmarks)

- FS: XFS (nobarrier, noatime)

Benchmark

Tests were run using pgbench with two database sizes, set with the scale parameter -s: 9000 (a DB of ~140GB) and 1000 (a DB of ~15GB). We wanted to test two different scenarios:

- the DB cannot fit into the OS page cache, so there is a lot more reading directly from the SSDs, and

- the whole DB fits into the page cache, so all reads are served from RAM.
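The link between the scale factor and the two scenarios can be sketched in Python. This assumes roughly 15.6MB per scale unit, a figure back-computed from the two sizes above rather than taken from the pgbench documentation, and it ignores the memory Postgres itself uses (for example shared_buffers). The function names are hypothetical.

```python
from typing import List

# Approximate size of one pgbench scale unit (100,000 pgbench_accounts rows),
# back-computed from the sizes above: 1000 -> ~15GB, 9000 -> ~140GB.
MB_PER_SCALE_UNIT = 15.6


def estimated_db_size_gb(scale: int) -> float:
    """Rough on-disk size of a pgbench database initialized with -s <scale>."""
    return scale * MB_PER_SCALE_UNIT / 1024


def fits_in_page_cache(scale: int, cache_gb: float) -> bool:
    """True if the estimated DB size is below the memory available for caching."""
    return estimated_db_size_gb(scale) < cache_gb


def init_command(scale: int, dbname: str) -> List[str]:
    """argv that creates and populates the pgbench tables at the given scale."""
    return ["pgbench", "-i", "-s", str(scale), dbname]


if __name__ == "__main__":
    for scale in (1000, 9000):
        size = estimated_db_size_gb(scale)
        fits = fits_in_page_cache(scale, cache_gb=29)
        print(f"-s {scale}: ~{size:.0f}GB, fits in 29GB of RAM: {fits}")
```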

Three different tests were run (write, read/write and read-only) with a script that, before every test, runs VACUUM FULL on the DB, drops all caches and restarts Postgres, so that each test starts from a clean state. Each pgbench benchmark ran for 1 hour.
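The per-test sequence described above can be sketched in Python. This is not the original script: the database name, the service name `postgresql-9.5`, the client count, and the mapping of the write, read/write and read tests to pgbench's `-N`, default and `-S` modes are all assumptions.

```python
import subprocess
from typing import List

# Assumed mapping of the three tests to built-in pgbench scripts; the
# original script's exact pgbench options are not known.
MODE_FLAGS = {
    "write": ["-N"],      # "simple update": skips pgbench_tellers/branches updates
    "read-write": [],     # default TPC-B-like script
    "read": ["-S"],       # select-only script
}


def build_plan(mode: str, dbname: str = "pgbench", service: str = "postgresql-9.5",
               clients: int = 20, duration_s: int = 3600) -> List[List[str]]:
    """Commands for one clean-state run, as argv lists (all defaults are hypothetical)."""
    return [
        # Rewrite tables and indexes to remove bloat left by earlier runs.
        ["psql", "-d", dbname, "-c", "VACUUM FULL"],
        # Flush dirty pages, then drop page cache, dentries and inodes (root only).
        ["sh", "-c", "sync && echo 3 > /proc/sys/vm/drop_caches"],
        # Restart Postgres so its shared buffers start out empty.
        ["systemctl", "restart", service],
        # Run the benchmark for duration_s seconds (-T), one thread per client.
        ["pgbench", *MODE_FLAGS[mode], "-c", str(clients), "-j", str(clients),
         "-T", str(duration_s), dbname],
    ]


def run_plan(plan: List[List[str]]) -> None:
    """Execute the commands in order, stopping at the first failure."""
    for argv in plan:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    for mode in ("write", "read-write", "read"):
        run_plan(build_plan(mode))
```

Building the commands as plain lists keeps the plan easy to inspect (or print as a dry run) before anything touches the server.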

We first ran the script on an older kernel, 3.10.0-693.el7.x86_64, which doesn’t have the mitigation patches, to get a reference. Then we switched to the kernel that has all the mitigations (3.10.0-693.11.6.el7.x86_64): first we disabled them all, then enabled them one at a time, and finally tested with all of them enabled. CentOS 7 lets you easily enable or disable these mitigations by writing 1 (enable) or 0 (disable) to specific debugfs files. For example, to disable all mitigations we used:

```sh
# Run as root; debugfs must be mounted at /sys/kernel/debug
echo 0 > /sys/kernel/debug/x86/pti_enabled
echo 0 > /sys/kernel/debug/x86/ibpb_enabled
echo 0 > /sys/kernel/debug/x86/ibrs_enabled
```

The file names make it clear which mitigation each one controls.
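When cycling through configurations (all off, one at a time, all on), the same debugfs writes can be wrapped in a small helper. This Python sketch assumes the RHEL/CentOS 7 tunable paths shown above and root privileges; the helper names are hypothetical.

```python
from pathlib import Path

# debugfs directory holding the RHEL/CentOS 7 tunables used in the text.
# Writing to it requires root and debugfs mounted at /sys/kernel/debug.
DEBUGFS_X86 = Path("/sys/kernel/debug/x86")
TUNABLES = ("pti_enabled", "ibpb_enabled", "ibrs_enabled")


def mitigation_writes(pti, ibpb, ibrs, base=DEBUGFS_X86):
    """Map each tunable file to the text `echo 1` or `echo 0` would write."""
    wanted = {"pti_enabled": pti, "ibpb_enabled": ibpb, "ibrs_enabled": ibrs}
    return {base / name: ("1\n" if wanted[name] else "0\n") for name in TUNABLES}


def set_mitigations(pti, ibpb, ibrs, base=DEBUGFS_X86):
    """Enable (True) or disable (False) each mitigation, in a fixed order."""
    for path, text in mitigation_writes(pti, ibpb, ibrs, base).items():
        path.write_text(text)


def read_mitigations(base=DEBUGFS_X86):
    """Return the current value of each tunable as an int."""
    return {name: int((base / name).read_text().strip()) for name in TUNABLES}


if __name__ == "__main__":
    # Equivalent to the three echo 0 commands above: disable all mitigations.
    set_mitigations(pti=False, ibpb=False, ibrs=False)
    print(read_mitigations())
```

Reading the values back after each change is a cheap way to confirm the kernel accepted the write before starting a benchmark run.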

To mitigate Spectre, besides the kernel updates, we also needed Intel’s microcode and firmware updates. The problem was that these microcode/firmware updates caused random system reboots, so Red Hat reverted them in their packages. This means that the latest versions of CentOS/RHEL are still vulnerable to Spectre (variant 2), and will probably stay that way until Intel releases stable microcode updates. To be able to test the Spectre mitigations, we had to downgrade the microcode_ctl and linux-firmware packages to these versions:

- microcode_ctl-2.1-22.2

- linux-firmware-20170606-57.gitc990aae

We didn’t notice any reboots or other issues with these versions, but it’s probably still safer to keep the latest versions on production servers.
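Before benchmarking, it may help to verify that the pinned package versions are actually installed. A minimal Python sketch, assuming `rpm -q` reports full package names that begin with the pinned version strings listed above (followed by a dist tag and architecture); the helper names are hypothetical, and `yum downgrade` only works if those versions are still available in an enabled repository.

```python
import subprocess
from typing import List, Sequence

# Versions from the text that still carry Intel's (later reverted) updates.
PINNED = ("microcode_ctl-2.1-22.2", "linux-firmware-20170606-57.gitc990aae")


def downgrade_command(pins: Sequence[str] = PINNED) -> List[str]:
    """argv that asks yum to downgrade to the pinned versions (run as root)."""
    return ["yum", "downgrade", "-y", *pins]


def missing_pins(installed: Sequence[str], pins: Sequence[str] = PINNED) -> List[str]:
    """Pins with no installed package whose full name is '<pin>' or starts with '<pin>.'.

    `installed` holds `rpm -q` output lines, where a dist tag and the
    architecture follow the version-release given in the pin.
    """
    return [pin for pin in pins
            if not any(name == pin or name.startswith(pin + ".") for name in installed)]


if __name__ == "__main__":
    result = subprocess.run(["rpm", "-q", "microcode_ctl", "linux-firmware"],
                            stdout=subprocess.PIPE, universal_newlines=True)
    missing = missing_pins(result.stdout.splitlines())
    if missing:
        print("not pinned:", missing, "->", " ".join(downgrade_command()))
    else:
        print("pinned versions are installed")
```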

Results

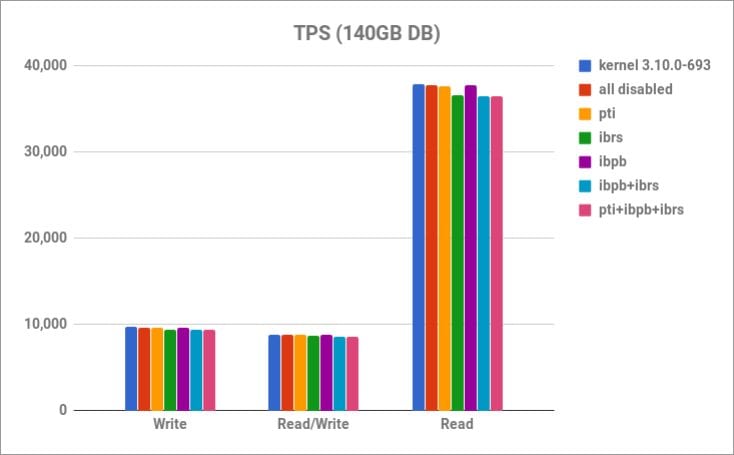

Here are the results for the 140GB DB, in transactions per second (TPS; higher is better):

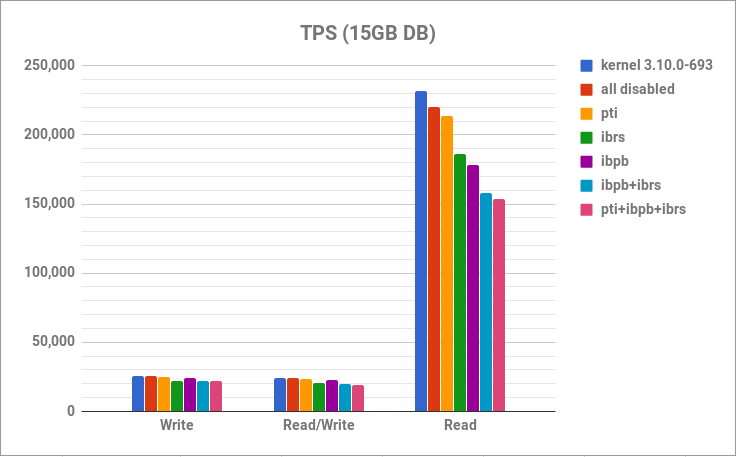

As you can see, there isn’t much of a difference in the write and read/write tests, while in the read-only test IBRS appears to have the biggest performance impact, though it’s still small. If we run the same tests on the 15GB DB, things look different:

Again, in the write and read/write tests there isn’t much of a difference, but in the read-only test the impact is noticeable. Even with all three mitigations disabled, there is a drop of about 5%. With PTI enabled we lose 8%, with IBRS 20% and with IBPB 23%, while with all three enabled we lose a total of 34% compared to kernel 3.10.0-693.el7.x86_64, which is huge.
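All of these percentages are relative to the TPS of the unpatched 3.10.0-693.el7.x86_64 kernel. A minimal Python sketch of that calculation follows; the function names are illustrative, and the TPS values in the example are hypothetical placeholders, not the measured numbers from the charts.

```python
from typing import Dict


def pct_drop(baseline_tps: float, tps: float) -> float:
    """Throughput lost, in percent of the baseline TPS."""
    return (baseline_tps - tps) * 100 / baseline_tps


def drops_vs_baseline(results: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Percent drop of every other configuration relative to `baseline`, rounded to 0.1."""
    base = results[baseline]
    return {name: round(pct_drop(base, tps), 1)
            for name, tps in results.items() if name != baseline}


if __name__ == "__main__":
    # Hypothetical TPS values for illustration only, not the measured results.
    example = {"3.10.0-693.el7": 1000, "all disabled": 950, "all enabled": 660}
    print(drops_vs_baseline(example, baseline="3.10.0-693.el7"))
```

Because every configuration is compared with the same baseline, the individual drops are not meant to be added together.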

It is important to mention that this is a benchmark and not a real-world workload. But if you have a small DB that fits into RAM and a mostly read workload, you will probably be impacted the most. In other use cases you shouldn’t notice much of a difference, but of course, it’s best to test with your own application workloads. It was also interesting that all the news said PTI would have the biggest performance impact, while these results showed the opposite: in this benchmark, the Spectre mitigations were the bigger performance problem. It would also be worth running these tests on a CPU without PCID and INVPCID support to see what the impact would be there.