One of the most important properties of Continuous Integration (CI) builds is that they behave the same way every time. Even more important, a build should succeed only if every step in it completed successfully. TeamCity (TC), like many CI tools, is very powerful and flexible. Because of that, it's relatively easy to make mistakes that leave your builds neither consistent nor repeatable, which can lead to a variety of problems. Here are a few short examples of how to make TC builds tighter and more repeatable.

Beware of the Command Line Build Step

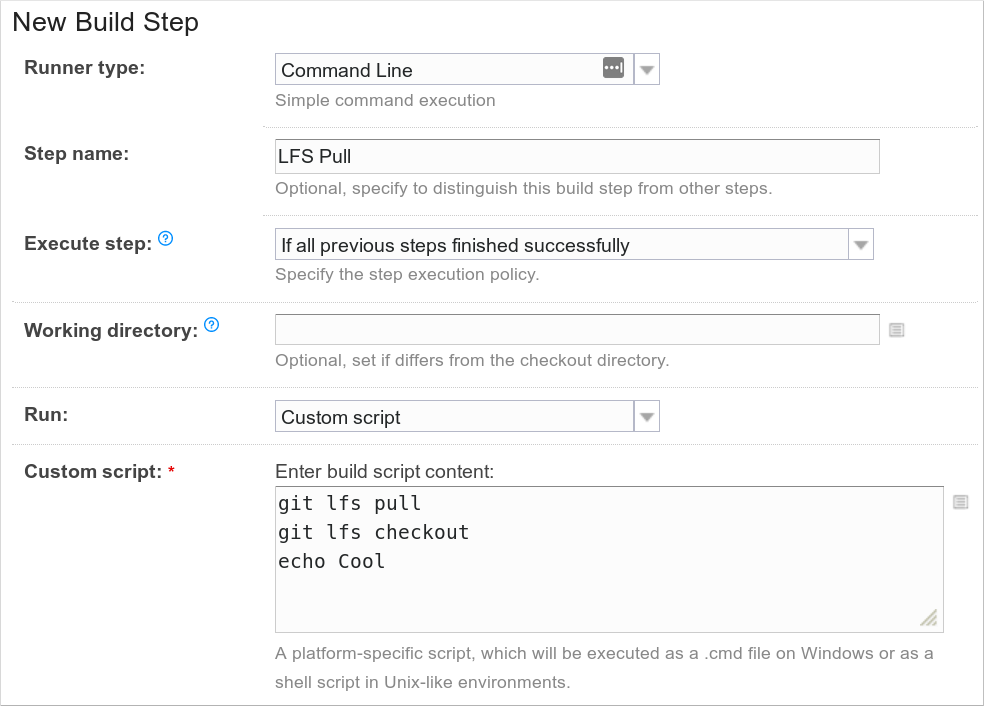

The Command Line build step (especially with the Custom script type) is the most powerful step, because it gives you the most freedom. It is probably one of the most used steps in TC, but it's also the easiest one to make a mistake with. The main thing to be aware of is that Command Line doesn't always work the way you might expect. For example, check this build step:

This step runs three commands, and you would expect the whole step to fail if any one of them failed. But that isn't the case. The step actually fails only if the last command fails, and because that command is echo, that will likely never happen. As a result, the two git lfs commands above it can fail and TC will still continue as if everything were OK. This means you may not have the correct source files to work with, and there is no telling what the outcome of the build will be.
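To see this behaviour outside of TC, here is a minimal sketch you can run locally. It assumes the step contained git lfs pull, git lfs checkout and echo Cool, and it replaces the two git lfs commands with false (a command that always fails) so that no repository is needed. The step_status variable is a name made up for this demo.

```shell
#!/bin/bash

# Run the "step" in a separate bash process and capture its exit status.
step_status=0
bash -c '
  false        # stand-in for a failing "git lfs pull"
  false        # stand-in for a failing "git lfs checkout"
  echo "Cool"  # last command, always succeeds
' || step_status=$?

echo "step exit status: $step_status"   # prints: step exit status: 0
```

Both stand-in commands fail, yet the script reports 0, and that exit status is all the build step gets to see.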

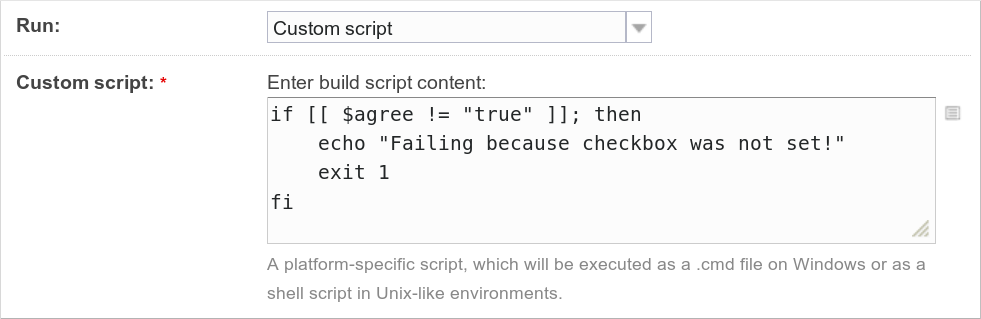

But that is not the only thing to be aware of. In the example above we only used regular commands, but what if we wrote a shell snippet like this:

The snippet runs in the default shell of the TC agent the build is running on. Shells can differ between agents depending on configuration, distribution, or operating system, so the same script could behave differently from one agent to the next. In this example, the double brackets [[ ]] are Bash-specific and not part of POSIX, which means they are not guaranteed to work in other shells.
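As an illustration only (this is not the snippet from the build step, and the branch variable and its value are made up), here is a Bash-only [[ ]] test next to a case statement that performs the same check in any POSIX shell:

```shell
#!/bin/bash

branch="release/1.0"   # hypothetical value, used only for this demo

# Bash-specific: [[ ]] supports glob matching on the right-hand side.
if [[ "$branch" == release/* ]]; then
  bash_result="release"
else
  bash_result="other"
fi

# POSIX-compatible equivalent: case works in any POSIX shell.
case "$branch" in
  release/*) posix_result="release" ;;
  *)         posix_result="other" ;;
esac

echo "bash: $bash_result, posix: $posix_result"
```

In a shell without [[ ]], the first test ends up in the else branch after a "not found" error, while the case version keeps working. That kind of silent difference is exactly what makes builds behave differently between agents.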

Fixing Command Line

To fix the shell problem, it's best to always start your scripts with the shebang of the shell you want to use. For Bash, we should start our scripts with:

```shell
#!/bin/bash
```

This makes sure the script always runs in Bash.

To resolve the first issue, where commands fail but the build step does not, we have two options. Usually the easiest solution is to add set -e at the top of the script:

```shell
#!/bin/bash
set -e
```

This tells Bash to stop executing and exit the script as soon as any command fails. The whole script then exits with the failure status of that command, which stops the TC build.
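A minimal sketch of the effect, runnable locally: false stands in for a failing git lfs command so that no repository is needed, and step_status is a hypothetical variable name used only for this demo.

```shell
#!/bin/bash

# Run the "step" in a separate bash process and capture its exit status.
step_status=0
bash -c '
  set -e
  false        # stand-in for a failing "git lfs pull"; the script exits here
  false        # never reached
  echo "Cool"  # never reached
' || step_status=$?

echo "step exit status: $step_status"   # prints: step exit status: 1
```

The script stops at the first failing command and exits with that command's status, so nothing after it runs and the caller sees a non-zero exit code.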

The only way the Command Line module knows whether a command failed is by checking the exit code of the script. 0 means success, everything else is failure.

There is an alternative way to resolve this without using set -e, and I would only recommend it if you have just a few commands. You can separate commands with &&, which basically means "run the next command only if the previous one succeeded". For example:

```shell
#!/bin/bash

git lfs pull && git lfs checkout

# or on separate lines
git lfs pull && \
  git lfs checkout
```

I would also like to note that you shouldn't need to use the echo command (or any other command) to print something into the build log unless you have a really good reason for it. In the first example, the echo Cool command is useless and just pollutes the build log. You don't need to check the build log to see whether the commands finished successfully, because TC will tell you by failing the build when they don't.

Run Builds Inside Docker Containers

We have solved the problems of builds running in different shells and of builds not failing when they should, but why not take it even further? What happens if different TC agents have different software, or different versions of it, installed? How do you know that the build will be exactly the same on all TC agents? You can solve that by automating the provisioning of all TC agents (which we did). However, it is still possible that some users' builds install software on the agents themselves during the build (e.g. pip install --user somepkg), and that is hard to control. The easiest solution is to always have a clean build environment, which is fairly easy to get by running your builds inside Docker containers.

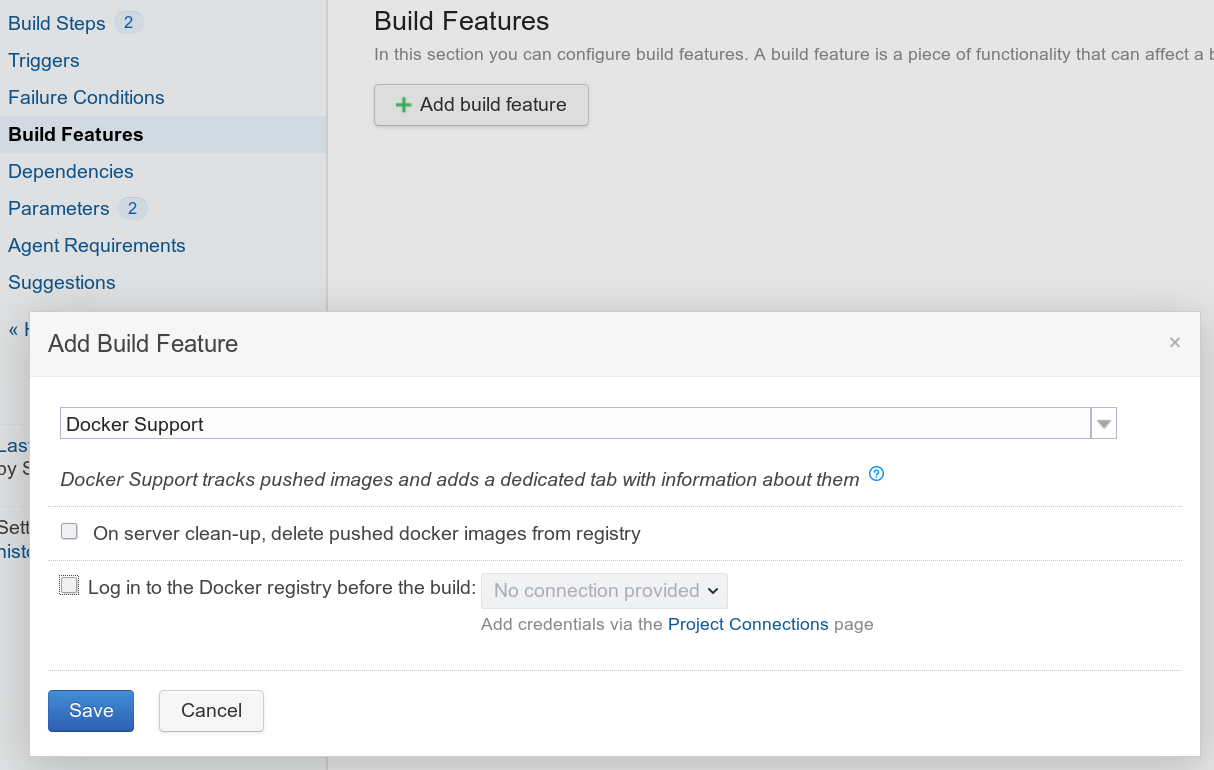

TeamCity supports Docker out of the box; you just have to enable it as a build feature:

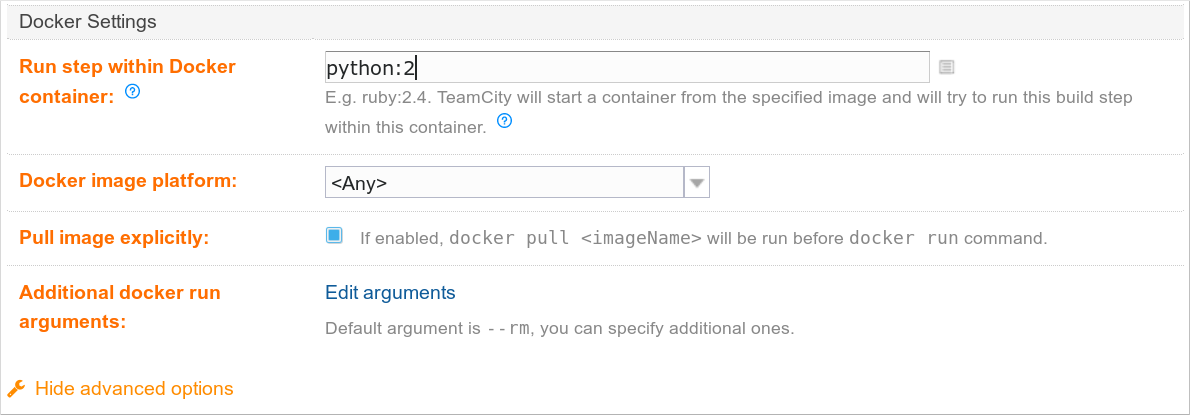

Next, in the Command Line step, choose to run the step inside a Docker container by specifying an image:

You can use one of the many public images available on https://hub.docker.com/, or build your own. Here we are using a Python 2 image. When this build step runs, TC starts the container and mounts the whole build directory into the Docker container's working directory. This means that you will always have a clean and identical environment.
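If you want to reproduce something similar by hand, the sketch below is roughly what running a step in a container amounts to. The exact command TC generates isn't shown here, so treat the flags, the same-path mount and the run_in_container helper as assumptions; python:2 is the image tag assumed for the Python 2 image mentioned above. DOCKER can be overridden, for example with echo, to preview the command instead of running it.

```shell
#!/bin/bash

# Docker binary to use; override with DOCKER=echo to print the command only.
DOCKER=${DOCKER:-docker}

# Hypothetical helper: run a command in a fresh container, with the current
# directory mounted at the same path and used as the working directory.
run_in_container() {
  local image=$1
  shift
  $DOCKER run --rm -v "$PWD":"$PWD" -w "$PWD" "$image" "$@"
}
```

For example, run_in_container python:2 python --version starts a throwaway container, runs the command in it, and removes the container afterwards (--rm), so nothing installed during the run survives to the next build.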

Merge Command Line Steps

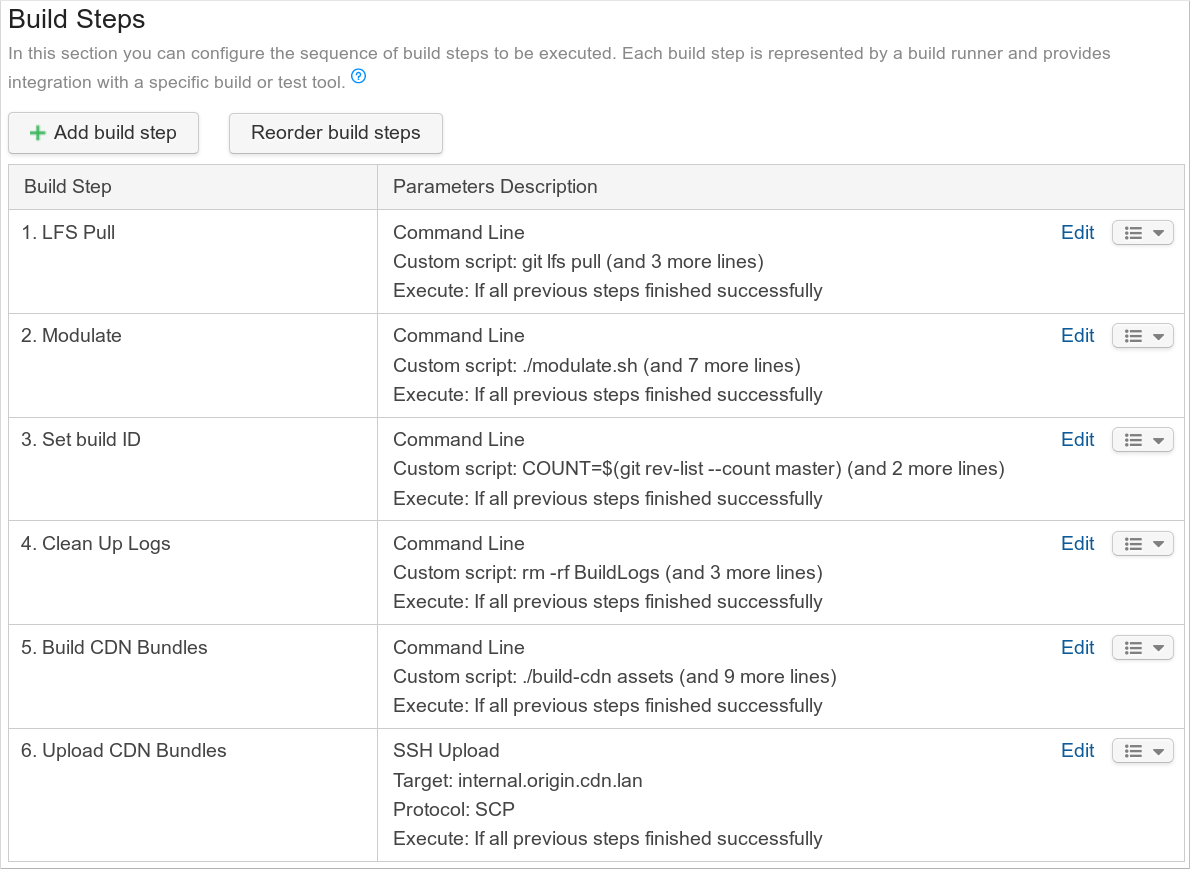

One last little tip. If you have a lot of Command Line steps one after another, don't split them into many small build steps; merge them into one bigger step. That step will be a lot easier to maintain and edit later on. But also make sure to check what is actually happening. Look at this example:

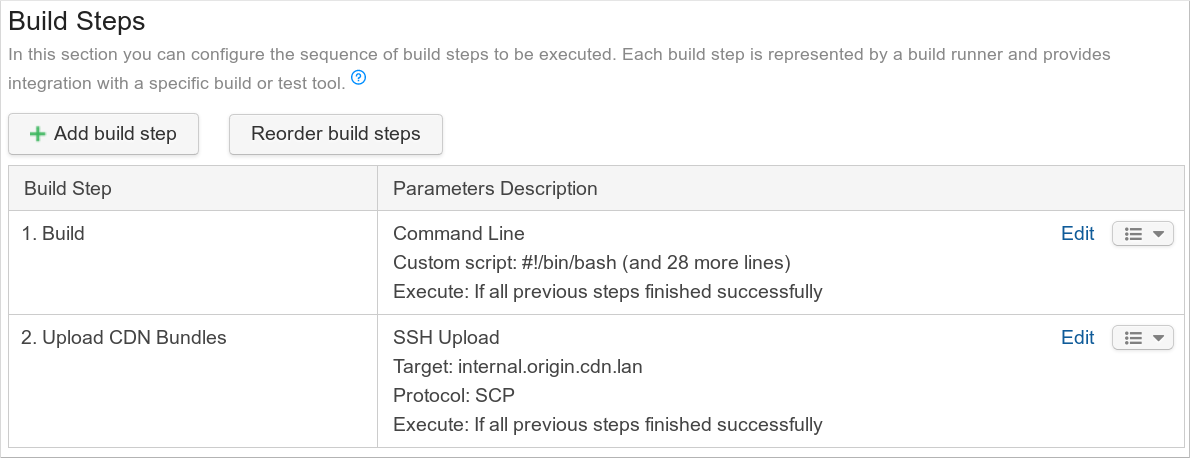

The first five steps are all Command Line steps, and they could all be merged together, so the result should look like this:

Now we have only one build step, which is very easy to read and edit. It's simple and easy to maintain. It's also much easier to see what is actually happening when you open this single step; before, you needed to click on each build step to see what it does.

Of course, these tips should apply to any CI tool (e.g. Jenkins, which we also use). Did you have problems with your TC builds because of these issues? Do you have some other TeamCity tips? Feel free to share in the comments below.