Key Concepts

The goal of this article is to give a brief overview of how the goal-oriented AI developed for F.E.A.R. works, as it still stands among the most interesting AI developed for FPS games. Much of the material here is based on the GDC 06 talk given by Jeff Orkin. The original slides and paper are listed under Resources at the bottom of the article.

So let's get into it!

Just how good is the AI from original F.E.A.R.?

The following video is a really nice showcase of how enemy soldiers move, attack, flank and communicate in order to challenge the player.

The F.E.A.R. AI

The developers of F.E.A.R. at Monolith Productions also worked on Shogo (1998), No One Lives Forever (2000), No One Lives Forever 2 (2002), and Tron 2.0 (2003). Their AI engine improved from game to game and culminated in F.E.A.R. (2005).

This is how they described what they wanted to build for F.E.A.R.:

We wanted F.E.A.R. to be an over-the-top action movie experience, with combat as intense as multiplayer against a team of experienced humans. A.I. take cover, blind fire, dive through windows, flush out the player with grenades, communicate with teammates, and more.

Their AI is based on finite-state machines (FSMs), which remain a very popular framework for driving AI systems, but the FSMs are used differently than in most other games because they are combined with a goal-oriented approach.

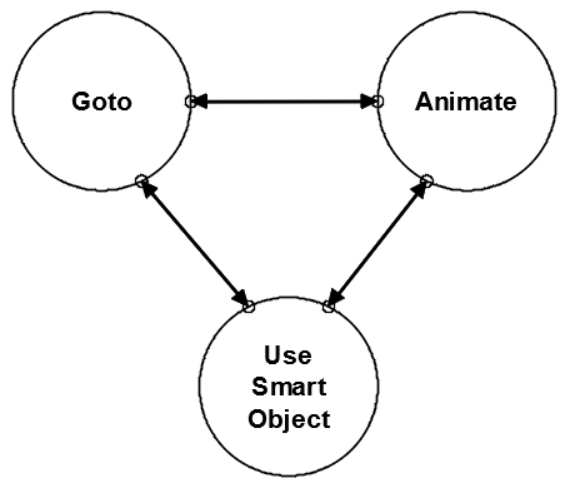

The FSM developed for the game has only three states:

- Goto

- Animate

- UseSmartObject

The UseSmartObject state is actually a specialization of the Animate state, so it can be said that the FSM really has only two states: Goto and Animate.

The authors also state that, at its core, all the AI does is tell characters where to move and which animation to play, and the FSM shown above supports exactly that.

The core problem is deciding when to switch between these two states and which parameters to set: where should the A.I. move to, which animation should be played, and should the animation play once or in a loop?
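The two states and their parameters can be sketched as follows. This is only an illustration: the `Agent` class, its field names, and the instant-arrival `update` are assumptions made for this article, not Monolith's code.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Tuple


class State(Enum):
    GOTO = auto()
    ANIMATE = auto()


@dataclass
class Agent:
    position: Tuple[float, float] = (0.0, 0.0)
    state: State = State.ANIMATE
    destination: Optional[Tuple[float, float]] = None
    animation: str = "Idle"
    loop: bool = True

    def goto(self, destination):
        """Switch to Goto; the only parameter is where to move."""
        self.state = State.GOTO
        self.destination = destination

    def animate(self, animation, loop=False):
        """Switch to Animate; parameters are which clip and whether it loops."""
        self.state = State.ANIMATE
        self.animation = animation
        self.loop = loop

    def update(self):
        """One tick: arrive instantly (no pathfinding in this sketch)."""
        if self.state is State.GOTO and self.destination is not None:
            self.position = self.destination
            self.animate("Idle", loop=True)


soldier = Agent()
soldier.goto((10.0, 4.0))
soldier.update()
soldier.animate("FireWeapon", loop=True)
```

Everything interesting lives outside this class: something else has to decide when to call `goto` or `animate` and with which arguments.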

Often the logic that solves these problems is embedded into the FSMs themselves, which makes them more and more complex. F.E.A.R. takes a different approach and moves that logic into a planning system. The idea is to give the AI the knowledge it needs to make a (good) decision when solving these problems.

Older games (from the early 2000s) were perfectly fine with having enemies simply find cover and pop out to shoot at random. But as players' expectations grew and developers wanted to push things to their limit, there was a need for a more realistic and immersive AI experience. The novel approach taken by F.E.A.R. was motivated by the desire to create more challenging and realistic AI for its soldiers, and it was also supported by what the team had learned while working on their previous games.

FSM vs Planning

An FSM defines what the AI should do in every situation, given the state the AI is currently in. A planning system tells the AI what its goals and actions are, and lets the AI decide which sequence of actions will satisfy those goals.

The planning system used in F.E.A.R. is inspired by the STRIPS [1] planning system from academia.

| FSM | Planning |
|---|---|
| How | What |
| Procedural | Declarative |

STRIPS planning

Planning is a formalized process of searching for a sequence of actions that satisfies a goal. This process is also called plan formulation.

STRIPS (STanford Research Institute Problem Solver) is a planner developed at the Stanford Research Institute in the early 1970s with the idea of creating a formalized system for plan formulation and goal satisfaction. STRIPS consists of goals and actions. Goals describe a desired state of the world we want to reach, and actions are defined in terms of preconditions and effects. An action may execute only if all of its preconditions are met, and each action changes the state of the world in some way.

States can be represented as either logical sentences or vectors.

Logical sentence: AtLocation(Home) ∧ Wearing(Tie)

Vector: (AtLocation, Wearing) = (Home, Tie)

Here is an example given in the GDC paper by the authors themselves. Alma is a character from the game.

Now, let’s look at an example of how the STRIPS planning process works. Let’s say that Alma is hungry. Alma could call Domino’s and order a pizza, but only if she has the phone number for Domino’s. Pizza is not her only option, however; she could also bake a pie, but only if she has a recipe. So, Alma’s goal is to get to a state of the world where she is not hungry. She has two actions she can take to satisfy that goal: calling Domino’s or baking a pie. If she is currently in a state of the world where she has the phone number for Domino’s, then she can formulate the plan of calling Domino’s to satisfy her hunger. Alternatively, if she is in the state of the world where she has a recipe, she can bake a pie. If Alma is in the fortunate situation where she has both a phone number and a recipe, either plan is valid to satisfy the goal. We’ll talk later about ways to force the planner to prefer one plan over another. If Alma has neither a phone number nor a recipe, she is out of luck; there is no possible plan that can be formulated to satisfy her hunger.
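Alma's situation can be written down as a tiny planning problem. The sketch below is a minimal illustration, not the planner from the paper: the key names (`Hungry`, `HasPhoneNumber`, `HasRecipe`), the tuple encoding of actions, and the choice of a breadth-first forward search are all assumptions made for brevity.

```python
from collections import deque

# Hypothetical encoding: an action is (name, preconditions, effects).
ACTIONS = [
    ("CallDominos", {"HasPhoneNumber": True}, {"Hungry": False}),
    ("BakePie", {"HasRecipe": True}, {"Hungry": False}),
]


def satisfies(state, conditions):
    """True when every condition holds in the given world state."""
    return all(state.get(key) == value for key, value in conditions.items())


def plan(start, goal, actions):
    """Breadth-first forward search; returns a list of action names or None."""
    frontier = deque([(start, [])])
    seen = {frozenset(start.items())}
    while frontier:
        state, steps = frontier.popleft()
        if satisfies(state, goal):
            return steps
        for name, preconditions, effects in actions:
            if not satisfies(state, preconditions):
                continue
            successor = {**state, **effects}
            key = frozenset(successor.items())
            if key not in seen:
                seen.add(key)
                frontier.append((successor, steps + [name]))
    return None  # no sequence of actions reaches the goal


goal = {"Hungry": False}
with_phone = plan({"Hungry": True, "HasPhoneNumber": True, "HasRecipe": False}, goal, ACTIONS)
with_recipe = plan({"Hungry": True, "HasPhoneNumber": False, "HasRecipe": True}, goal, ACTIONS)
with_nothing = plan({"Hungry": True, "HasPhoneNumber": False, "HasRecipe": False}, goal, ACTIONS)
```

With only a phone number the plan is to call Domino's, with only a recipe it is to bake a pie, and with neither the search runs out of states and returns `None`.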

A STRIPS action is defined by its preconditions and effects. Preconditions are described in terms of the state of the world, and effects are described as a list of modifications to the state of the world.

In the original paper, changes to the world are expressed with Add List and Delete List operators. The reason is that STRIPS is based on formal logic, which allows a variable to have multiple values or, more precisely, to be unified with multiple values. This is desirable in some situations, for example when buying items, as we can have multiple items in our possession. So when the value of a variable changes, we first delete the values we want to replace (the Delete List) and then add the new values (the Add List).
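The delete-then-add mechanics can be illustrated with plain sets of facts. The facts and the two actions below (`DriveToWork`, `BuyCoffee`) are invented for this sketch and do not come from the STRIPS paper.

```python
def apply_action(state, delete_list, add_list):
    """Return a new state: remove the delete-list facts, then add the add-list facts."""
    return (state - delete_list) | add_list


# A state is a set of (predicate, value) facts, so a predicate may hold several values.
state = frozenset({("AtLocation", "Home"), ("Has", "Tie"), ("Has", "Wallet")})

# Hypothetical "DriveToWork" action: replaces the location, keeps possessions.
after_drive = apply_action(
    state,
    delete_list=frozenset({("AtLocation", "Home")}),
    add_list=frozenset({("AtLocation", "Work")}),
)

# Hypothetical "BuyCoffee" action: adds one more value for the same predicate.
after_purchase = apply_action(
    after_drive,
    delete_list=frozenset(),
    add_list=frozenset({("Has", "Coffee")}),
)
```

Changing a value needs both lists, while acquiring an extra item needs only the Add List.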

Let’s continue with examples from the paper. Paxton is also a character from the game.

Back to our original example, there are two possible plans for feeding Alma. But what if instead we are planning for the cannibal Paxton Fettel? Neither of these plans that satisfied Alma’s hunger will satisfy someone who only eats human flesh! We need a new EatHuman action to satisfy Fettel. Now we have three possible plans to satisfy hunger, but only two are suitable for Alma, and one is suitable for Paxton Fettel.

This is basically what F.E.A.R. does. Instead of planning how to satisfy hunger, enemies plan how to eliminate threats. For example, this can be done by firing a gun at the target (assuming bullets are available), or by using a melee attack (assuming the enemy is close enough), etc.

Planning in F.E.A.R.

Monolith was driven by the philosophy that it is the level designer's job to create an environment full of interesting spaces, items, flanking positions, glass windows, and tables, and that the AI should be built in a way that lets it exploit these goodies in a fun and realistic manner.

For this they developed internal tooling which accelerates the work on game features (more about this in the resources part of this article). An agent in their game space needs to have a set of goals that AI will try to fulfill depending on the state of the world.

Let’s assume that there are two goals, Patrol and Kill Enemy, and three entities spawned with these goals: a Soldier, an Assassin, and a Rat. The soldier will execute basic patrol logic, while the assassin will patrol cloaked and occasionally hide on the walls. The rat will move around like the soldier (though not with the same animations, of course). When any of them spots the player, they will execute their behavior for Kill Enemy, but this behavior will differ. The soldier will shoot, the assassin will jump down from the wall and execute a melee attack, and the rat will fail to formulate any plan to attack the player. Obviously it doesn't make sense to give a rat the goal of killing an enemy; it is used in this example only to show that an agent can fail to formulate a plan.
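The soldier, assassin, and rat example boils down to one goal and three different action sets. The sketch below assumes made-up action names and world-state keys, and uses a small depth-limited forward search rather than the game's actual planner.

```python
from collections import deque

# Hypothetical action tables: name -> (preconditions, effects).
SOLDIER_ACTIONS = {
    "Attack": ({"WeaponLoaded": True}, {"TargetIsDead": True}),
}
ASSASSIN_ACTIONS = {
    "JumpDownFromWall": ({}, {"NearTarget": True}),
    "AttackMelee": ({"NearTarget": True}, {"TargetIsDead": True}),
}
RAT_ACTIONS = {}  # nothing a rat can do has the effect TargetIsDead


def holds(state, conditions):
    return all(state.get(key) == value for key, value in conditions.items())


def formulate_plan(state, goal, actions, max_depth=4):
    """Depth-limited breadth-first search over the agent's own action set."""
    frontier = deque([(state, [])])
    while frontier:
        current, steps = frontier.popleft()
        if holds(current, goal):
            return steps
        if len(steps) >= max_depth:
            continue
        for name, (preconditions, effects) in actions.items():
            if holds(current, preconditions):
                frontier.append(({**current, **effects}, steps + [name]))
    return None


KILL_ENEMY = {"TargetIsDead": True}
soldier_plan = formulate_plan({"WeaponLoaded": True}, KILL_ENEMY, SOLDIER_ACTIONS)
assassin_plan = formulate_plan({}, KILL_ENEMY, ASSASSIN_ACTIONS)
rat_plan = formulate_plan({}, KILL_ENEMY, RAT_ACTIONS)
```

The goal is shared, yet the soldier shoots, the assassin jumps down and strikes, and the rat ends up with no plan at all.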

Benefits of Planning:

- Decoupling Goals and Actions

- Layering Behaviors

- Dynamic problem solving

Decoupling Goals and Actions

Monolith's earlier games (such as No One Lives Forever 2) embedded logic into FSMs, which caused a lot of problems whenever the team wanted to modify the behavior of some actors, especially late in development. For example, an out-of-shape policeman and a regular policeman would share very similar logic, but the out-of-shape policeman would have to stop to catch his breath after running for some time. Supporting this would require changing an already complex FSM.

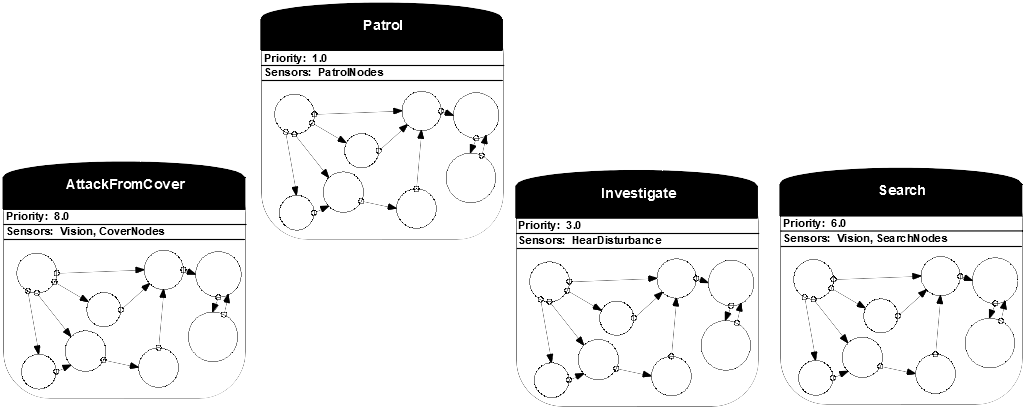

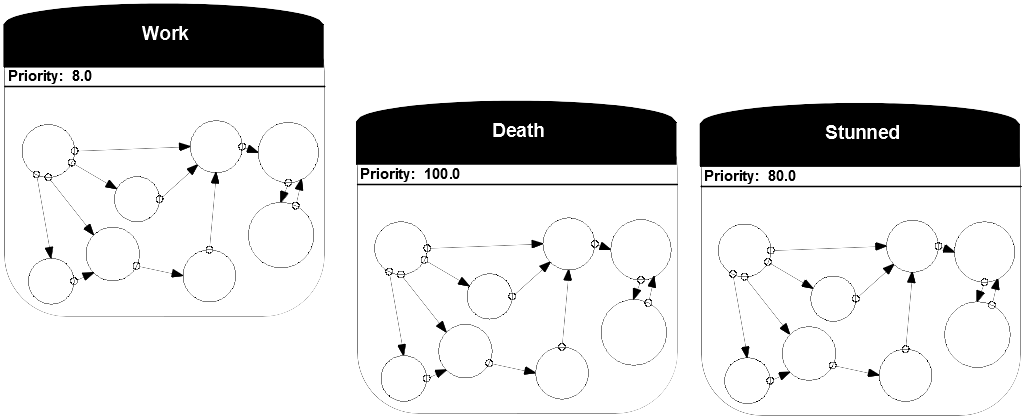

The following diagrams show No One Lives Forever 2 FSMs.

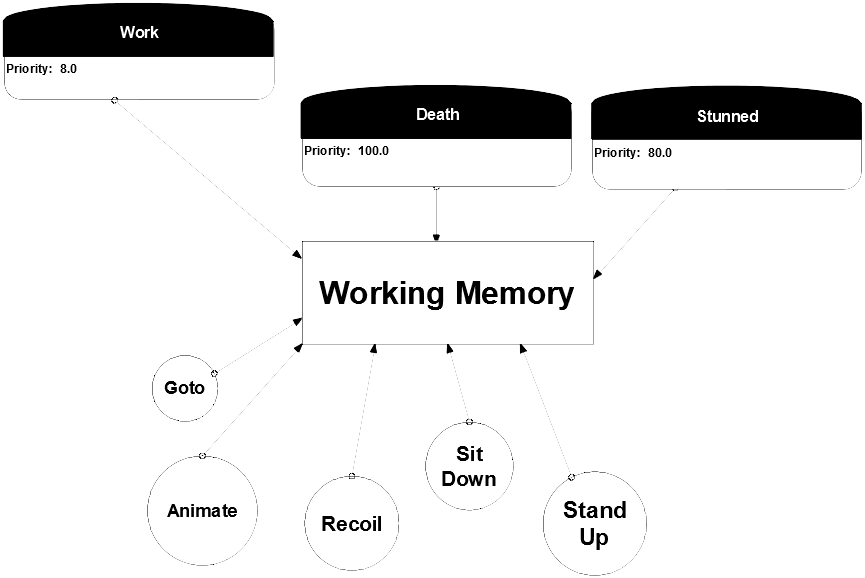

This illustrates the need to decouple goals and actions. Once they are decoupled, a concept of working memory is introduced, which allows goals and actions to share information about the state of the environment. For example, if an actor is sitting at a desk when he is shot, we want to play a death animation on him directly, rather than making him stop what he is doing, stand up, and only then play the death animation. This information can be accessed through the working memory.

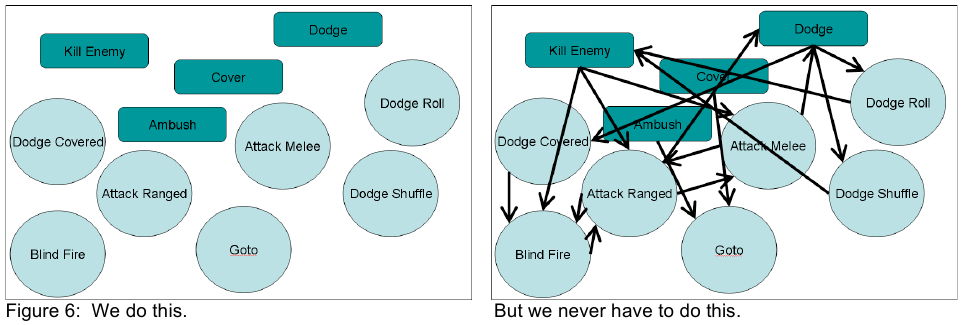

This diagram shows the decoupled goals and actions in F.E.A.R.

Layering Behaviors

The planning approach has another benefit which is that behaviors can be layered. For example, basic soldier combat behavior consists of 7 layers. It is nicely summarized by the authors themselves (I hope you are not hungry):

We start our seven layer dip with the basics; the beans. A.I. fire their weapons when they detect the player. They can accomplish this with the KillEnemy goal, which they satisfy with the Attack action.

We want A.I. to value their lives, so next we add the guacamole. The A.I. dodges when a gun is aimed at him. We add a goal and two actions. The Dodge goal can be satisfied with either DodgeShuffle or DodgeRoll.

Next we add the sour cream. When the player gets close enough, A.I. use melee attacks instead of wasting bullets. This behavior requires the addition of only one new AttackMelee action. We already have the KillEnemy goal, which AttackMelee satisfies.

If A.I. really value their lives, they won’t just dodge, they’ll take cover. This is the salsa! We add the Cover goal. A.I. get themselves to cover with the GotoNode action, at which point the KillEnemy goal takes priority again. A.I. use the AttackFromCover action to satisfy the KillEnemy goal, while they are positioned at a Cover node. We already handled dodging with the guacamole, but now we would like A.I. to dodge in a way that is context-sensitive to taking cover, so we add another action, DodgeCovered.

Dodging is not always enough, so we add the onions; blind firing. If the A.I. gets shot while in cover, he blind fires for a while to make himself harder to hit. This only requires adding one BlindFireFromCover action.

The cheese is where things really get exciting. We give the A.I. the ability to reposition when his current cover position is invalidated. This simply requires adding the Ambush goal. When an A.I.’s cover is compromised, he will try to hide at a node designated by designers as an Ambush node.

The final layer is the olives, which really bring out the flavor. For this layer, we add dialogue that lets the player know what the A.I. is thinking, and allows the A.I. to communicate with squad members. We’ll discuss this a little later.
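The first three layers described above can be sketched as entries added to two registries. The registry layout, the numeric costs used to break ties, and the context keys (`ammo`, `distance`, `aimed_at`) are assumptions of this sketch; only the goal and action names come from the quoted text.

```python
# Hypothetical registries. An action is (effect it provides, cost, validity check).
goals = {"KillEnemy": "TargetIsDead"}
actions = {
    "Attack": ("TargetIsDead", 2, lambda ctx: ctx["ammo"] > 0),
}


def choose_action(goal, ctx):
    """Pick the cheapest currently valid action whose effect satisfies the goal."""
    wanted = goals[goal]
    valid = [
        (cost, name)
        for name, (effect, cost, is_valid) in actions.items()
        if effect == wanted and is_valid(ctx)
    ]
    return min(valid)[1] if valid else None


close_range = {"ammo": 30, "distance": 1.5, "aimed_at": True}
beans_only = choose_action("KillEnemy", close_range)

# Guacamole: a new goal plus an action that satisfies it.
goals["Dodge"] = "Dodged"
actions["DodgeRoll"] = ("Dodged", 1, lambda ctx: ctx.get("aimed_at", False))
dodge = choose_action("Dodge", close_range)

# Sour cream: one new action for an existing goal, no transitions to rewire.
actions["AttackMelee"] = ("TargetIsDead", 1, lambda ctx: ctx["distance"] < 2.0)
with_melee = choose_action("KillEnemy", close_range)
at_long_range = choose_action("KillEnemy", {"ammo": 30, "distance": 20.0})
```

Note that `choose_action` never changes as layers are added; each new behavior is just another table entry.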

The main idea is that goals and actions can be added without ever having to manually specify the transitions between these behaviors. The AI figures out the dependencies at run-time. This is nicely shown with the following diagram:

Dynamic Problem Solving

As mentioned in the previous section, the AI formulates its plans at run-time, so problems are solved dynamically. This means that the AI constantly reassesses the situation and tries to create new plans. This is very nicely illustrated by the following videos.

Both videos start in a similar way: a soldier is patrolling. In video 1 the player stands away from the door, so when the soldier opens the door, he detects the player and starts shooting. Video 2 is different because the player is blocking the door. The soldier tries to open it and fails, then tries to kick it and fails, and finally jumps through the window, detects the player, and executes a melee attack because the player is close by. These videos nicely illustrate the dynamic nature of the implemented AI.
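The door scenario amounts to a plan, execute, fail, replan loop. The following sketch assumes three made-up entry actions with illustrative costs and a very crude world model in which a blocked door defeats both opening and kicking.

```python
def plan_entry(known_failures):
    """Pick the cheapest way into the room that is not known to have failed."""
    options = [("OpenDoor", 1), ("KickDoor", 2), ("DiveThroughWindow", 5)]
    for name, _cost in sorted(options, key=lambda option: option[1]):
        if name not in known_failures:
            return name
    return None


def enter_room(door_is_blocked):
    """Execute the plan, and formulate a new one whenever an action fails."""
    failed, attempts = set(), []
    while True:
        action = plan_entry(failed)
        if action is None:
            return attempts, False
        attempts.append(action)
        fails = door_is_blocked and action in ("OpenDoor", "KickDoor")
        if not fails:
            return attempts, True
        failed.add(action)  # remember the failure, then replan


video_1 = enter_room(door_is_blocked=False)
video_2 = enter_room(door_is_blocked=True)
```

Nobody scripted "if the door is blocked, use the window"; the window is simply the next valid option once the cheaper ones are ruled out.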

Differences from STRIPS

The F.E.A.R. planner differs from the original STRIPS in the following ways:

- Cost Per Action

- No Add/Delete Lists

- Procedural Preconditions

- Procedural Effects

Cost Per Action

Cost per action is introduced to add another layer of prioritization and force an agent to prefer some actions over others. Costs are implemented as weights on the edges of the search graph, which also makes it possible to use the A* algorithm to search for a plan.
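A weighted plan search might look like the sketch below. The actions, their costs, and the heuristic (the number of unmet goal conditions, which never overestimates here because every action costs at least 1 and the goal has a single condition) are assumptions chosen for illustration, and the search runs forward from the current state for simplicity.

```python
import heapq
from itertools import count

# Hypothetical actions: name -> (cost, preconditions, effects).
ACTIONS = {
    "Attack": (3.0, {"WeaponLoaded": True}, {"TargetIsDead": True}),
    "AttackMelee": (1.0, {"NearTarget": True}, {"TargetIsDead": True}),
    "Reload": (2.0, {}, {"WeaponLoaded": True}),
    "GotoTarget": (6.0, {}, {"NearTarget": True}),
}


def unmet(state, conditions):
    """Number of conditions that do not hold in the state."""
    return sum(1 for key, value in conditions.items() if state.get(key) != value)


def a_star_plan(start, goal, actions):
    """A* over world states; returns (action names, total cost) or None."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    frontier = [(unmet(start, goal), next(tie), 0.0, start, [])]
    best = {}
    while frontier:
        _, _, spent, state, steps = heapq.heappop(frontier)
        if unmet(state, goal) == 0:
            return steps, spent
        key = frozenset(state.items())
        if best.get(key, float("inf")) <= spent:
            continue  # already expanded this state at an equal or lower cost
        best[key] = spent
        for name, (cost, preconditions, effects) in actions.items():
            if unmet(state, preconditions) == 0:
                successor = {**state, **effects}
                total = spent + cost
                priority = total + unmet(successor, goal)
                heapq.heappush(frontier, (priority, next(tie), total, successor, steps + [name]))
    return None


goal = {"TargetIsDead": True}
far_plan = a_star_plan({"WeaponLoaded": False, "NearTarget": False}, goal, ACTIONS)
near_plan = a_star_plan({"WeaponLoaded": False, "NearTarget": True}, goal, ACTIONS)
```

From a distance, reloading and shooting (cost 5) beats running up for a melee attack (cost 7), while an agent already next to the target prefers the cheap melee attack.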

No Add/Delete Lists

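The Add and Delete Lists were dropped because of implementation requirements and the features the programming language offers. The authors state that their world state consists of an array of four-byte values. With a single value per variable, applying an effect amounts to overwriting that value rather than deleting and adding facts.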
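A fixed array of four-byte values can be mimicked in Python with `ctypes`. The slot names in `Key` are hypothetical, since the article does not list the game's actual world-state variables.

```python
import ctypes
from enum import IntEnum


class Key(IntEnum):
    # Hypothetical world-state slots, one index per variable.
    TARGET_IS_DEAD = 0
    WEAPON_LOADED = 1
    AT_NODE = 2


# A fixed-size array type with one 32-bit (four-byte) integer per slot.
WorldState = ctypes.c_int32 * len(Key)


def apply_effects(state, effects):
    """Return a copy of the state with each affected slot overwritten."""
    result = WorldState(*state)
    for key, value in effects.items():
        result[key] = value
    return result


state = WorldState()          # all slots start at zero
state[Key.AT_NODE] = 17       # e.g. the id of the node the agent stands on
after_reload = apply_effects(state, {Key.WEAPON_LOADED: 1})
```

Because every variable has exactly one slot, there is nothing to delete before writing the new value.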

Procedural Preconditions

Procedural preconditions are checks performed at run-time, such as whether a path exists or whether an item is available. They were introduced to avoid having to encode too much information in the world state.
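One way to picture this is an action that carries both symbolic preconditions and a callback. The `Action` class, the `path_exists` stand-in, and the context keys are all hypothetical; a real game would query its navigation system instead of a set of node names.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Action:
    name: str
    preconditions: Dict[str, bool]         # symbolic, checked against the world state
    context_check: Callable[[dict], bool]  # procedural, evaluated at run-time

    def is_applicable(self, world_state, context):
        symbolic_ok = all(
            world_state.get(key) == value for key, value in self.preconditions.items()
        )
        return symbolic_ok and self.context_check(context)


def path_exists(context):
    """Stand-in for a pathfinding query."""
    return context["target_node"] in context["reachable_nodes"]


goto_cover = Action("GotoNode", {"CoverNodeKnown": True}, path_exists)

reachable = {"target_node": "cover_3", "reachable_nodes": {"cover_1", "cover_3"}}
cut_off = {"target_node": "cover_3", "reachable_nodes": {"cover_1"}}
can_go = goto_cover.is_applicable({"CoverNodeKnown": True}, reachable)
cannot_go = goto_cover.is_applicable({"CoverNodeKnown": True}, cut_off)
```

The world state only needs to say that a cover node is known; whether it can actually be reached is computed on demand.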

Procedural Effects

When an action is executed, it should change the game state, but usually not right away. That is why executing an action updates the FSM shown earlier, which is then used to delay the changes in the game world.
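The delay can be sketched with an action that first configures the FSM and only later touches the world state. The `Reload` class, its three-tick duration, and the dictionary-based agent are illustrative assumptions, not the game's implementation.

```python
class Reload:
    """Hypothetical action whose world-state effect lands when its animation ends."""

    duration = 3  # ticks, an illustrative number

    def __init__(self):
        self.ticks_left = 0

    def activate(self, agent):
        # Procedural effect: configure the FSM instead of editing the world state.
        agent["fsm_state"] = "Animate"
        agent["animation"] = "Reload"
        self.ticks_left = self.duration

    def update(self, agent, world_state):
        """Advance one tick; return True once the action has completed."""
        self.ticks_left -= 1
        if self.ticks_left > 0:
            return False
        world_state["WeaponLoaded"] = True  # the planned effect becomes real only now
        return True


agent, world_state = {}, {"WeaponLoaded": False}
action = Reload()
action.activate(agent)

history = []
done = False
while not done:
    done = action.update(agent, world_state)
    history.append(world_state["WeaponLoaded"])
```

During planning the effect is assumed immediately, but in the running game the weapon only counts as loaded once the animation has finished.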

Squad Behaviors and Communication

F.E.A.R. is an FPS in which the player has to defeat enemies, and to pose a challenge, the game often confronts the player with multiple enemies at once. With that in mind, it makes sense to have enemies form a squad that attacks the player in an organized and coordinated fashion. This is exactly what the AI in F.E.A.R. does really nicely.

There is a global coordinator in the game that tries to cluster enemies into squads based on their proximity. These squads can execute a squad behavior (or fail to do so).
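Proximity-based grouping can be sketched with a simple greedy clustering pass. The algorithm, the `radius` parameter, and the agent names are assumptions; the article does not describe how the game's coordinator actually measures proximity.

```python
from math import dist


def form_squads(positions, radius):
    """Greedy single-link clustering: agents within `radius` of a squad member join it."""
    squads = []
    for name, position in positions.items():
        touching = [
            squad
            for squad in squads
            if any(dist(position, positions[member]) <= radius for member in squad)
        ]
        merged = [name]
        for squad in touching:
            merged = squad + merged  # a newcomer can bridge several squads
            squads.remove(squad)
        squads.append(merged)
    return squads


positions = {
    "soldier_a": (0.0, 0.0),
    "soldier_b": (3.0, 0.0),
    "soldier_c": (50.0, 50.0),
    "soldier_d": (52.0, 50.0),
}
squads = form_squads(positions, radius=5.0)
```

Here the two pairs of soldiers end up in two separate squads because the pairs are far apart from each other.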

These are the behaviors the authors wanted the AI to exhibit:

Simple behaviors

- Suppression fire

- Goto positions

- Follow leader

Complex behaviors

- Flanking

- Coordinated strikes

- Retreats

- Reinforcements

There are four simple squad behaviors implemented in the original game:

- Get-to-Cover

- Advance-Cover

- Orderly-Advance

- Search

Get-to-Cover makes all squad members who are in invalid cover move into valid cover positions, while one squad member lays down suppression fire on the player to protect them.

Advance-Cover moves all squad members to valid cover positions closer to the player, while one squad member lays down suppression fire.

Orderly-Advance moves a squad to some position in a single file line, with one member covering the front of the formation, and one covering the back of the formation.

Search splits the squad into pairs who cover each other as they systematically search rooms in an area.

The coordinator for simple squad behaviors executes the following steps:

- Find participants (to form a squad)

- Send orders

- Monitor progress

- Determine whether the behavior succeeded or failed
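The four coordinator steps listed above can be sketched for a Get-to-Cover style behavior. The dictionary-based agents, the `alive` and `can_comply` flags, and the minimum squad size of two are assumptions made for this illustration.

```python
def run_get_to_cover(agents):
    """Hypothetical coordinator pass; returns 'succeeded' or 'failed'."""
    # 1. Find participants: any living agent can take part, and we need at least two.
    participants = [agent for agent in agents if agent["alive"]]
    if len(participants) < 2:
        return "failed"

    # 2. Send orders: the first member suppresses, the rest move to cover.
    participants[0]["order"] = "SuppressionFire"
    for member in participants[1:]:
        member["order"] = "GotoCover"

    # 3. Monitor progress: in this sketch each agent just reports whether it managed.
    results = [member["can_comply"] for member in participants]

    # 4. Decide whether the behavior as a whole succeeded.
    return "succeeded" if all(results) else "failed"


squad = [
    {"name": "a", "alive": True, "can_comply": True},
    {"name": "b", "alive": True, "can_comply": True},
    {"name": "c", "alive": False, "can_comply": False},
]
outcome = run_get_to_cover(squad)
lone_outcome = run_get_to_cover(squad[:1])
```

Each agent would still use its own planner to carry out the order, so the coordinator only issues orders and watches the result.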

In fact, no complex behaviors are implemented in the original game. Individual and simple squad behaviors, when combined, give the illusion of the AI doing something complex. Even though this may seem disappointing, it is actually a good result: in practice, the system is able to form (illusions of) complex behaviors by composing existing ones and reacting to the environment. This is also a nice moment to give this famous quote:

Here are the comments from the authors regarding this:

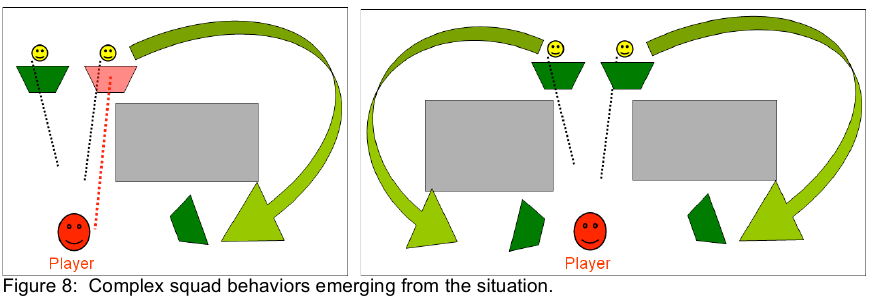

Imagine we have a situation similar to what we saw earlier, where the player has invalidated one of the A.I.’s cover positions, and a squad behavior orders the A.I. to move to the valid cover position. If there is some obstacle in the level, like a solid wall, the A.I. may take a back route and resurface on the player’s side. It appears that the A.I. is flanking, but in fact this is just a side effect of moving to the only available valid cover he is aware of.

In another scenario, maybe the A.I.s’ cover positions are still valid, but there is cover available closer to the player, so the Advance-Cover squad behavior activates and each A.I. moves up to the next available valid cover that is nearer to the threat. If there are walls or obstacles between the A.I. and the player, their route to cover may lead them to come at the player from the sides. It appears as though they are executing some kind of coordinated pincher attack, when really they are just moving to nearer cover that happens to be on either side of the player. Retreats emerge in a similar manner.

This is also illustrated by the following image:

Beyond F.E.A.R. and conclusion

The authors go on to state that the audio squad communication they implemented was a good design choice, as it further strengthened the illusion of complex AI behavior. One problem they encountered is that they did not approach it in a systematic fashion, but simply invoked audio dialog from multiple points in their C++ codebase. This led to a lot of trial and error to get everything right (timing lines properly, testing, connecting responses, avoiding repeated sentences...). They also state that in the future they would focus much more on improving this, and that they consider it a very important piece of the puzzle in creating the illusion of AI.

There are several conclusions for us to take away:

- A composition of simple behaviors can give the impression that a complex behavior is being executed

- Planning is an interesting approach to designing and implementing game AI

- Squad behaviors give the F.E.A.R. AI an additional level of complexity and quality

- Audio dialog between NPCs reinforces the illusion of complex AI

- Players cannot reliably notice some of the things the AI does

These conclusions touch on the topic of the illusion of intelligence that needs to be shown to players. A great read on these topics can be found in chapters 1 and 4 of Steve Rabin’s Game AI Pro 3. It turns out that as AI designers and developers we are in luck, as it isn’t so difficult to trick players into believing that an agent is very intelligent and shows complex behaviors! Perhaps a more important thing to accomplish is making sure that the agent doesn’t exhibit behavior that a human would deem stupid, as this would break the immersion while enjoying the game.

F.E.A.R. definitely deserves to be mentioned, as it’s one of the more unique games that left a mark on the childhood of many gamers, and this was a nice trip down memory lane!

Resources

Papers:

- [1] STRIPS: A New Approach to the Application of Theorem Proving to Problem Solving, Richard E. Fikes and Nils J. Nilsson

- [2] Game AI Planning Analytics: The Case of Three First-Person Shooters, Eric Jacopin

Interesting videos:

- AI and Games: The AI of F.E.A.R. - Goal Oriented Action Planning (2014)

- AI and Games: Building the AI of F.E.A.R. with Goal Oriented Action Planning (2020)

- Example of AI in F.E.A.R.

Original slides and paper from GDC 06: here

Part of F.E.A.R. source code (full AI and overall SDK) can be found here.