Key Concepts

Key concept is creating and evaluating a genetic programming (GP) based algorithm that can be used to automatically generate behavior trees (BTs) for an environment. For example, given a Super Mario game, we may wish to create an agent to be playing the game for multiple reasons like testing level during development, automating unit tests, evaluating level difficulty, providing AI to the game and similar. Requirements for this type of AI is often more on stability and interpretability then on beating the best human player. This article will give a brief introduction to behavior trees [1] and genetic programming [4], and then illustrate an interesting approach to generating behavior trees by using a hybrid algorithm of greedy search and genetic programming [1, 2].

Images are taken from [1] unless otherwise stated.

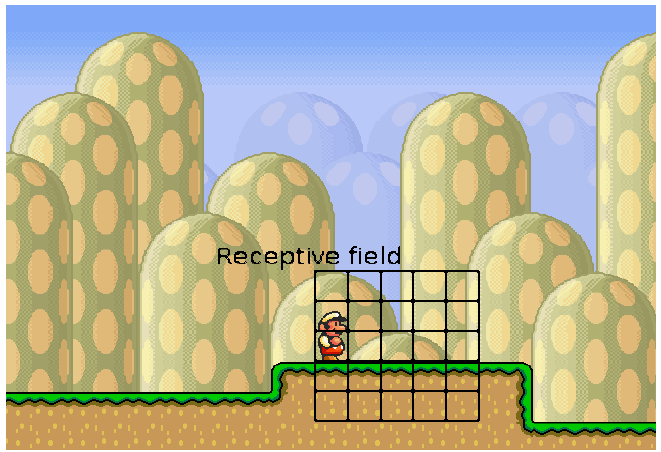

Super Mario Benchmark

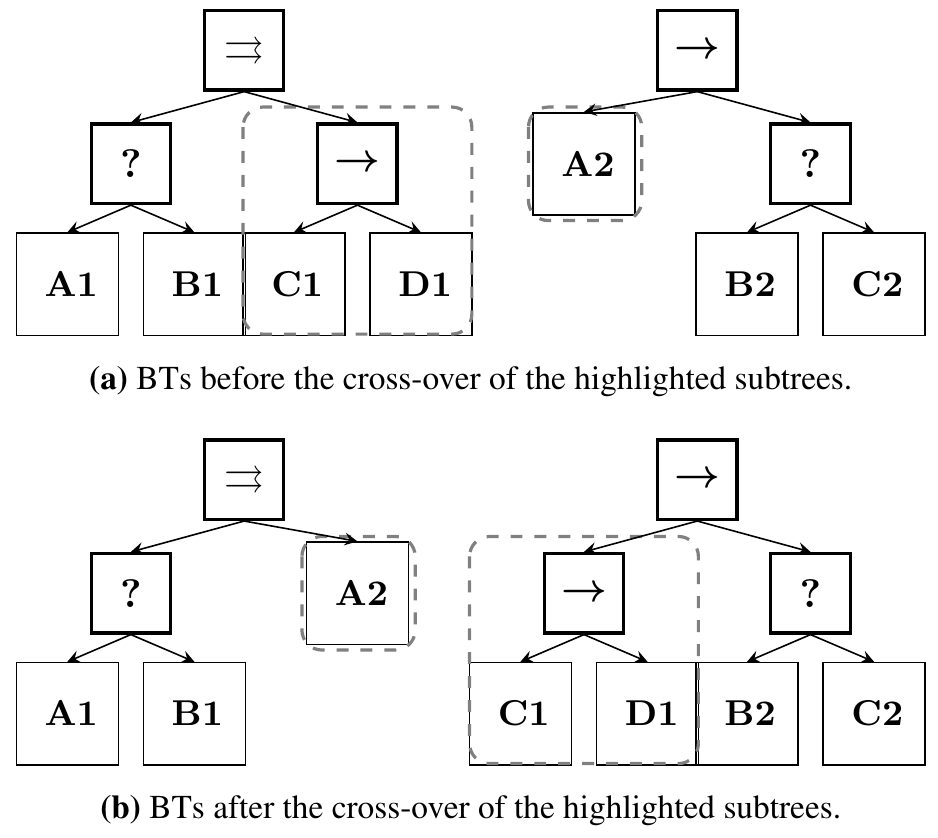

The Mario AI benchmark is an open-source clone of Nintendo’s Super Mario Bros that can be used to test and evaluate AI agents. Task is to move the controlled character (Mario) in a 2d platformer game with the goal to reach the end of the level (rightmost point). Mario needs to jump the gaps, jump over obstacles and avoid or kill enemies by jumping on their head or shooting at them if possible.

Actions that Mario can take are:

- Walk Left

- Walk Right

- Jump

- Croush

- Shoot

Mario has a receptive field that the environment returns with each cell having a binary information about is there an enemy in it and is there an obstacle in it.

Environment also gives information if Mario can jump and shoot. Overall this gives a total of 52 conditions that are used to define a state of the game. Environment allows to change the seed for level generation, so multiple levels of the same complexity (in terms of enemies, gaps…) can be generated.

Code of this environment is available here. This environment is used in the GP-BT algorithm that this article will cover in the later part of this text.

Behavior trees

Behavior tree is a structure that can be used to define the switching of different tasks (actions). It originates from the field of robotics where it is mainly used to define behavior of a robot.

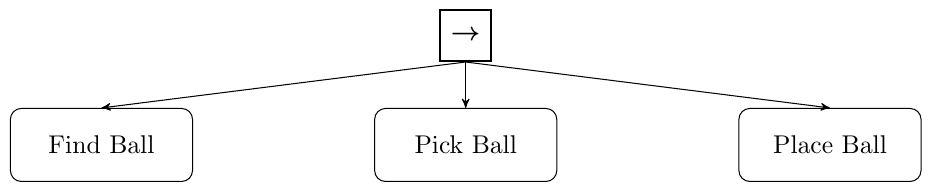

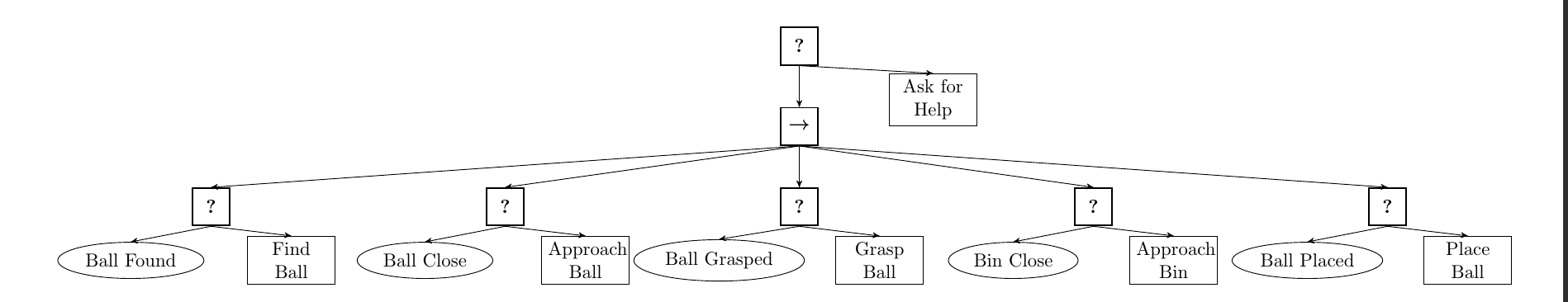

Behavior trees allow us to create systems that are modular and reactive. The following image shows a simple behavior tree. This behavior tree defines a sequence of actions in which the agent will try to find a ball, then try to pick it up, and finally, to place the ball somewhere.

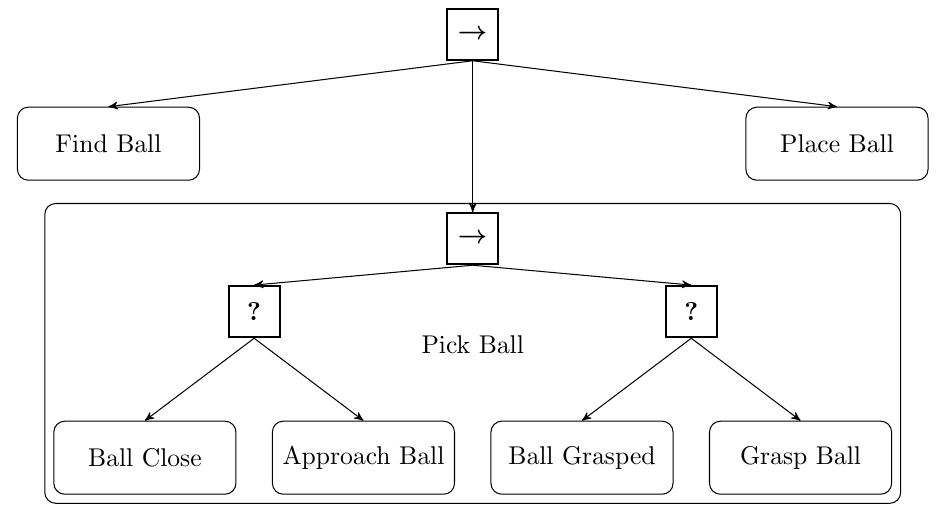

But the same behavior tree can be defined in a more detailed way. This illustrates the modularity and flexibility of behavior trees nicely. Instead of simply saying Pick Ball, it can be further defined on what it means to pick a ball. We can say that the agent first needs to check if the ball is close. If it is, then it will check if it is grasped (the previous part of the sequence node asserts that the ball is found), and if not, grasp it.

Behavior trees have became very popular in the gaming industry where they are mainly used to define behaviors of NPCs (non playable characters). Requirements of NPC characters are not necessarily beating the best human player but rather on playing their own small part of the game world or by giving a challenge to the player, but still being beatable. The following video contains the talk from GDC about behavior trees in the game The Divison.

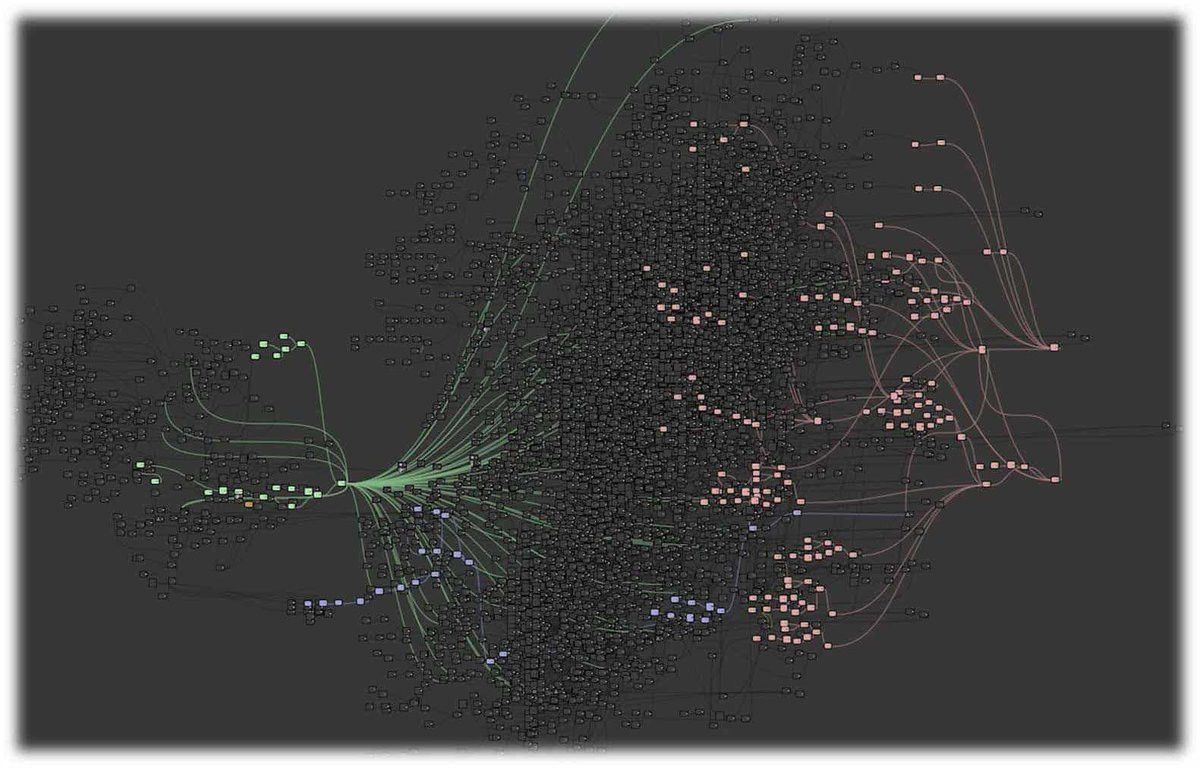

Here is an image from the video which illustrates the level of complexity of the behavior trees that are used inside of the game.

Basic BT nodes

Behavior tree (BT) is a rooted directed tree with different types of nodes. Node can be:

- Control flow node

- Execution node

Control flow nodes are:

- Fallback (decision) node

- Sequence node

- Parallel node

- Decorator node

Execution nodes are:

- Action node

- Condition node

- Decorator node

Node is executed when it receives a tick, and BT is ticked on some frequency. When BTs execute, they propagate signals which can be of type:

- Running

- Success

- Failure

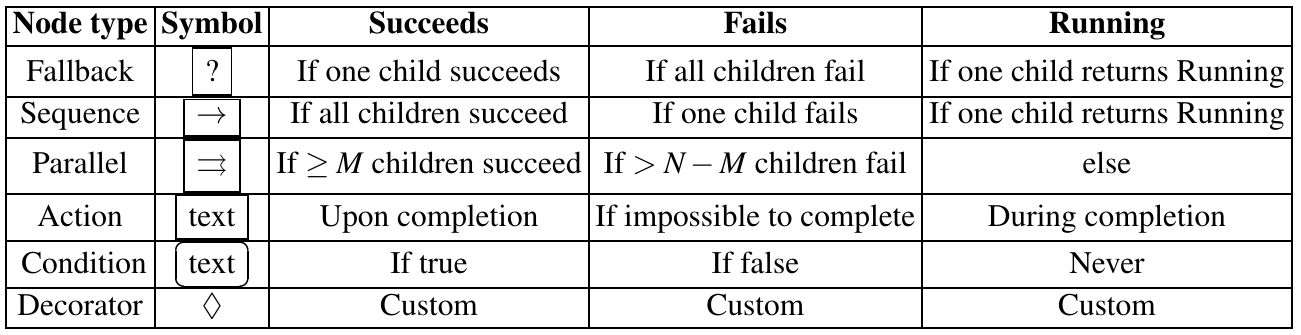

Control flow nodes

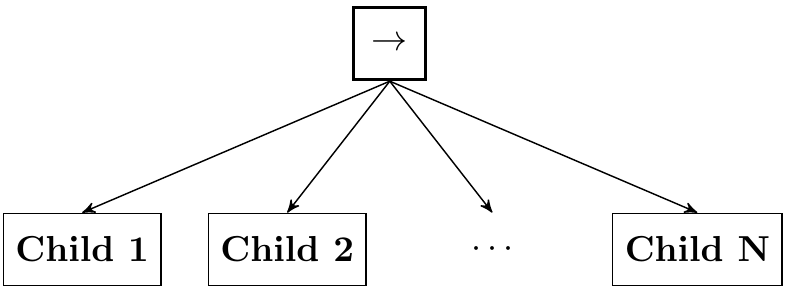

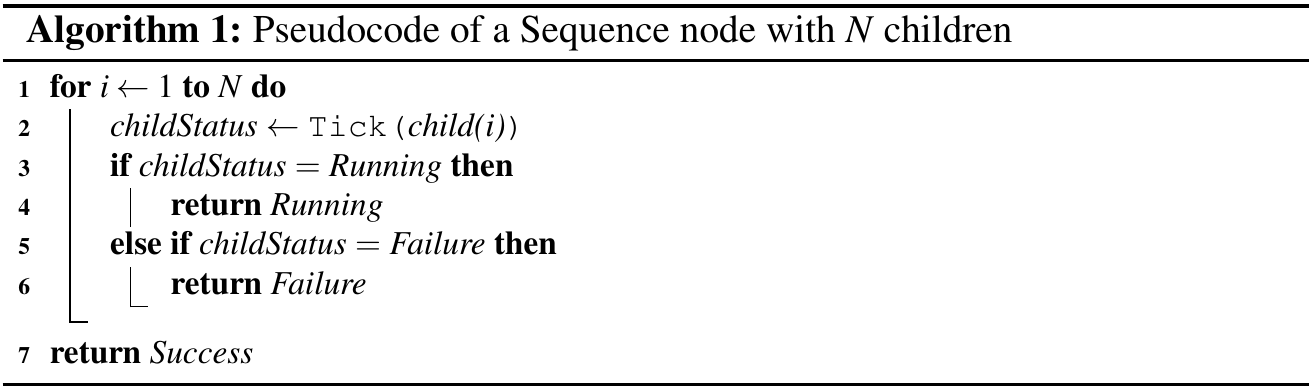

The sequence node is used to chain execution of children. It routes the ticks to its children from the left until it finds a child that returns either Failure or Running, then it returns and forwards that signal. It returns Success if and only if all its children return Success.

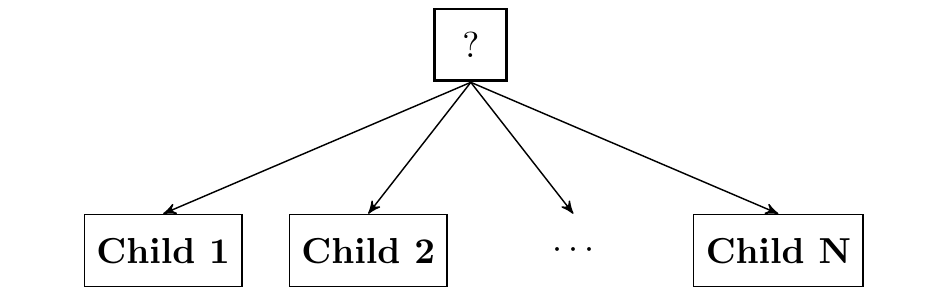

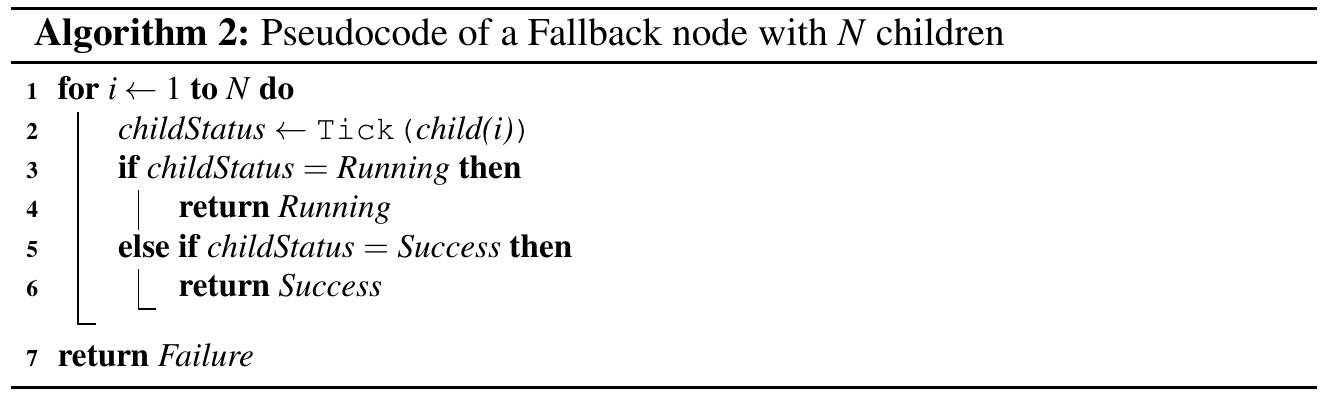

The fallback node is used to choose an action (similar to if-elseif-...-elseif branching). It routes the ticks to its children from the left until it finds a child that returns either Success or Running, then it returns and forwards that signal. It returns Failure if and only if all its children return Failure.

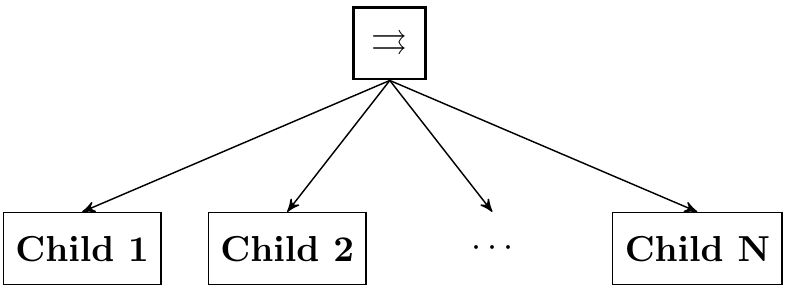

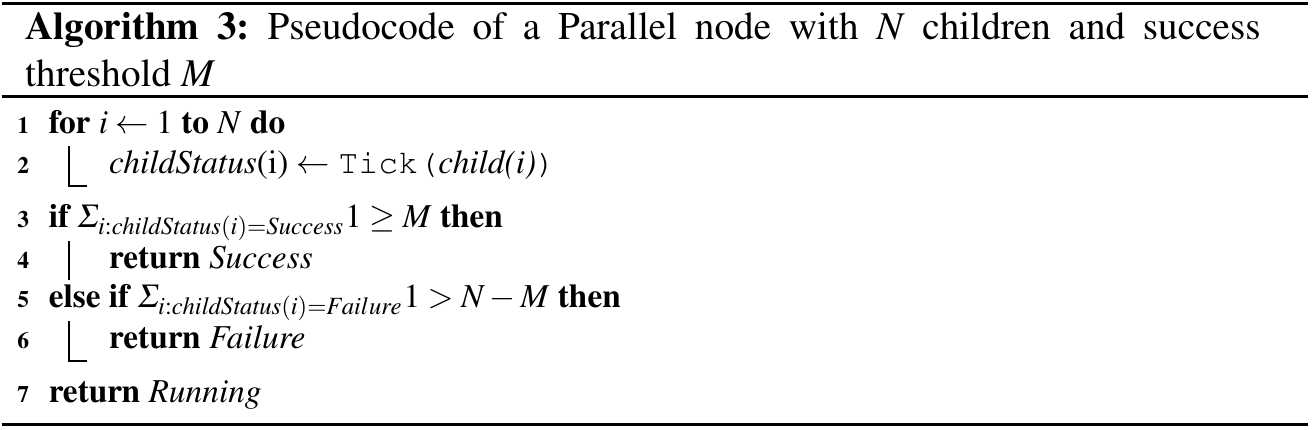

The parallel node is used to perform parallel execution. It returns Success if more then M children return Success, Failure if more then N-M children return Failure and Running otherwise.

Execution nodes

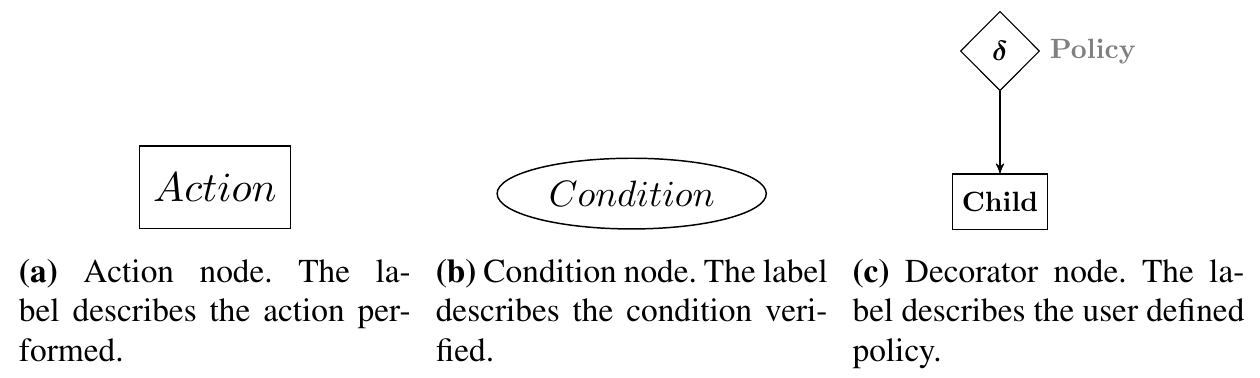

Action nodes are nodes that represent actions that an agent can execute. Typically these are functions and behaviors defined by the developer and are environment dependent.

Condition nodes are used to evaluate a predicate - is enemy in front, is health > 0 and similar.

Decorators are nodes that allow to define additional logic, for example to negate the predicate.

An example - Robot with the ball

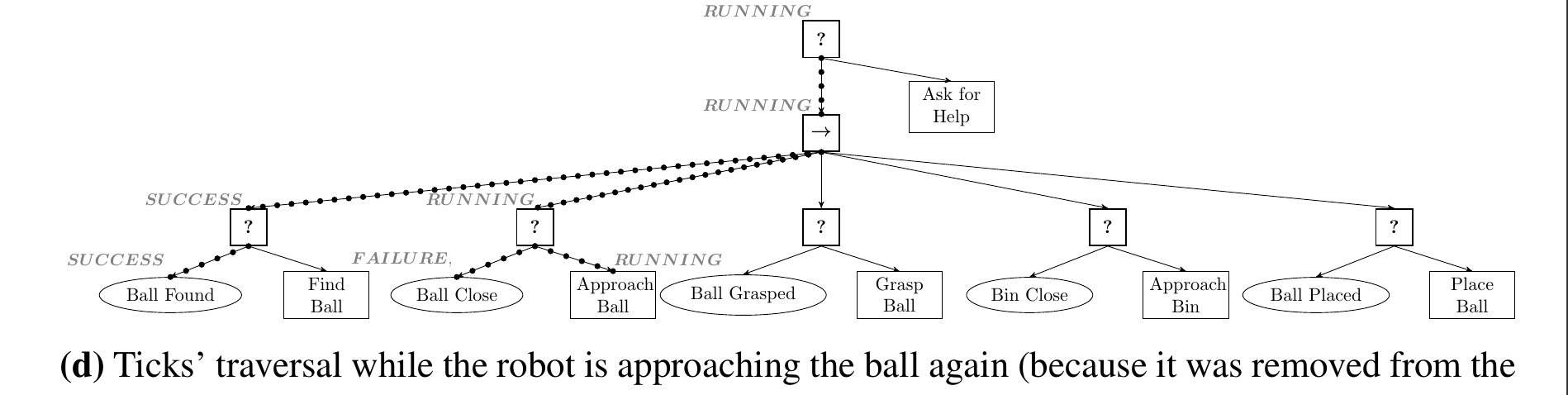

This example will illustrate the execution of the BT, including showing reactivity when another (external) agent takes the ball from our robot, forcing our robot to look for the ball again. The problem is to have a robot search for a ball, approach it, grasp it, go to the bin and place the ball inside.

Let's assume the following behavior tree is defined.

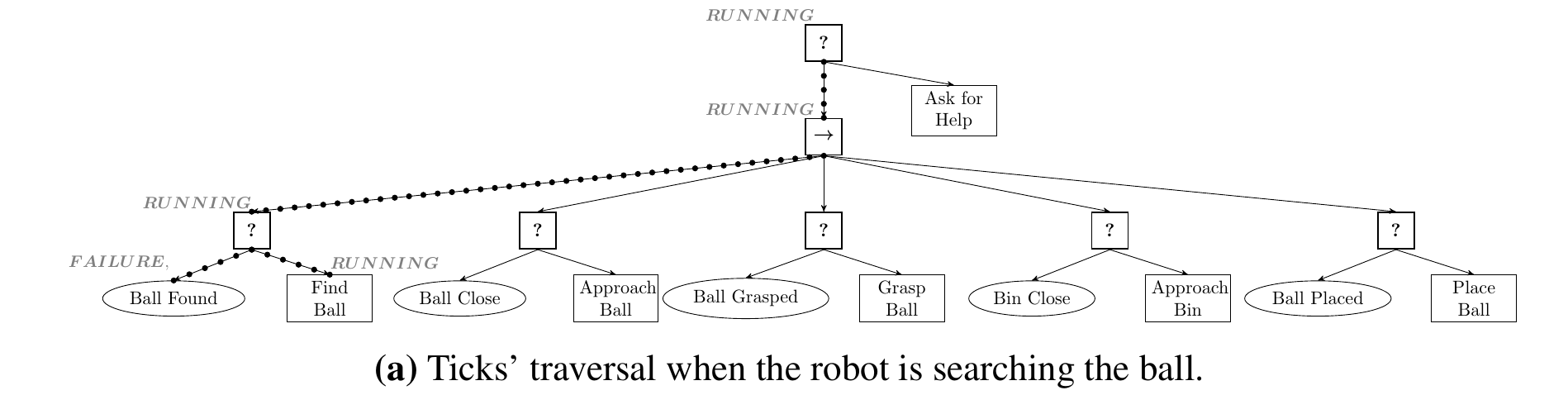

When the execution starts, BT is ticked and it reaches condition node Ball Found. Agent doesn’t know the ball position, hence the result is Failure, so Fallback node

ticks Find Ball action. This returns Running (and sends it upwards) as it was just started by BT. This is illustrated on the following image:

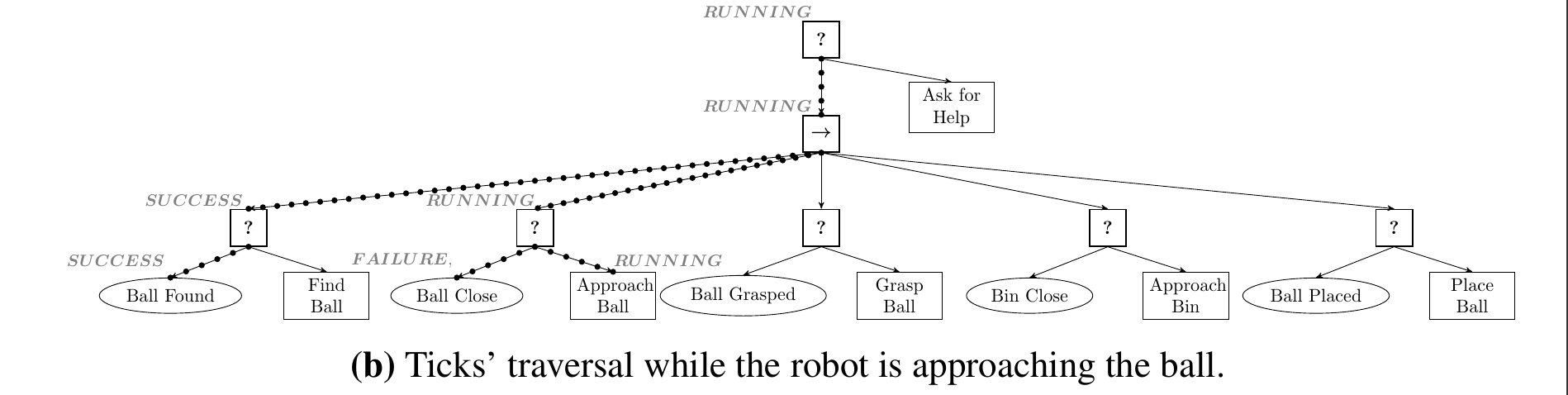

While executing Find Ball, agent sees the ball with the camera, so it learns the

ball position! So now Ball Found returns Success which gets propagated upwards the Sequence Node. BT keeps ticking its children, (assume that) Ball Close returns Failure. Then BT executes an action Approach Ball which returns Running.

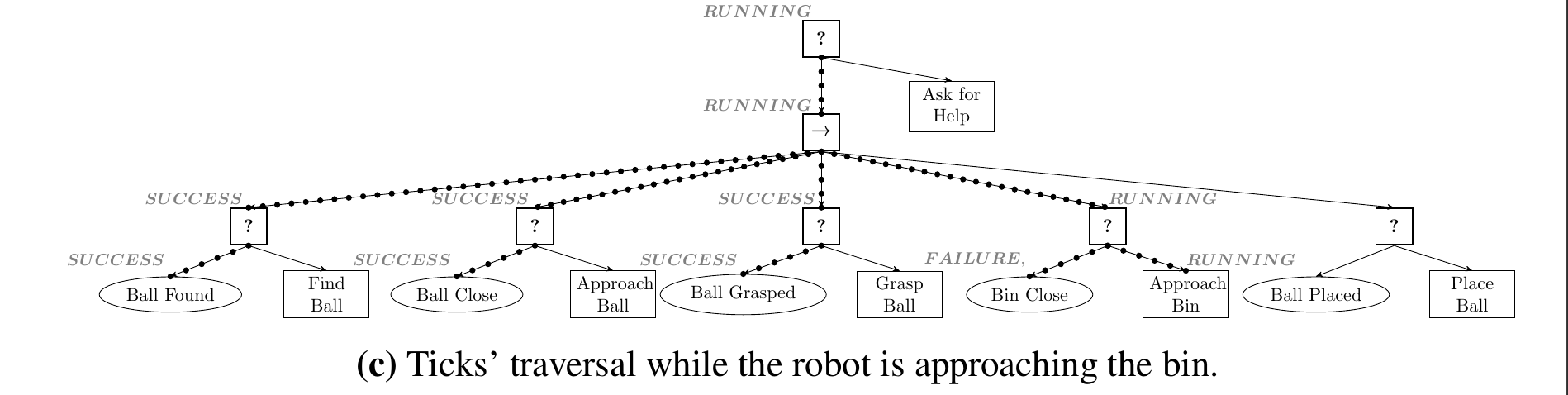

Eventually the agent reaches the ball, picks it up and goes towards the bin.

But, a plot twist, an external evil agent has appeared! He has just taken the ball from our poor little robot and placed it in front of it. So with the ball in his sight, our robot now starts approaching the ball again! We can see how reactive a BT to a sudden (unexpected) change in the environment.

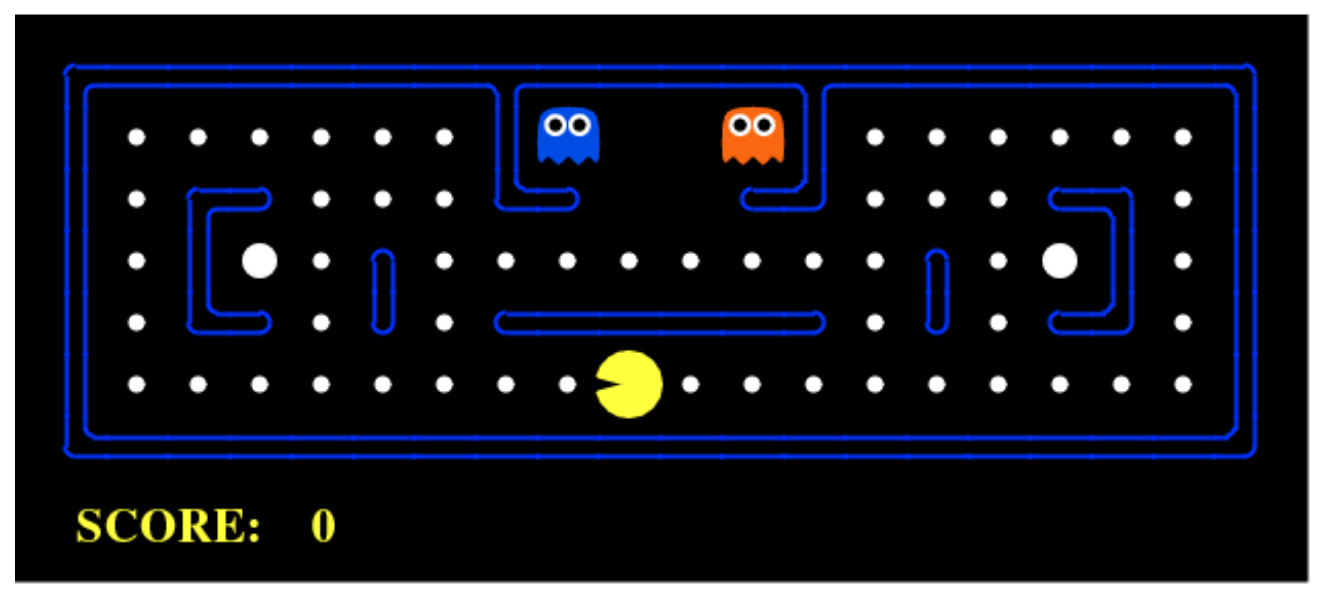

Elegance and systematic approach that behavior trees possess can also be shown for a BT example for the game of Pacman.

Basic agent can be defined in the following manner:

Genetic programming

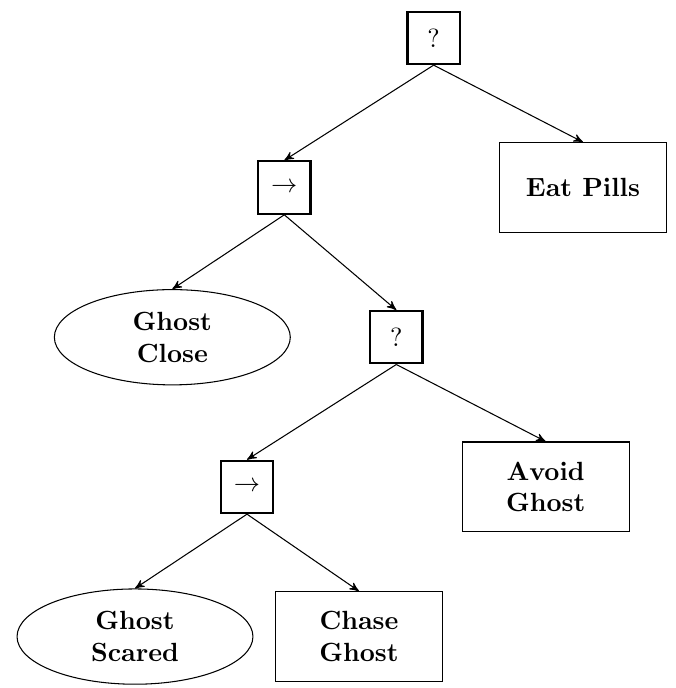

Genetic Programming (GP) is a type of Evolutionary Algorithm that takes an inspiration from biological evolution. It evolves a set of policies (defined as trees) until one of them is good enough for the problem that is being solved. A set of these individuals is called a generation. On each iteration of GP, a new generation is created from the previous one by using genetic operators of selection, crossover and mutation. Fitness function is a function that is used to evaluate the quality of an individual solution - for example, how high of a score has pacman achieved when it played the game.

Crossover is a genetic operator used to create new individuals from existing ones. Goal is to have new individuals be better (on average) than existing ones. Better often means having a higher value when evaluated using a fitness function. Selection is an algorithm used to select individuals to form a next generation. Mutation is used to introduce slight randomness in the algorithm in order to reduce the probability of algorithm converging to a local extrema. It is executed on an individual.

These operators can be applied on behavior tree nodes without major problems.

Genetic programming - Behavior Tree approach

Idea is to combine a greedy algorithm with genetic programming in order to iteratively generate a behavior tree that will manage to solve the problem. For example, create a BT that will allow an agent to reach the end of the Super Mario level. Greedy algorithm are used in order to speed up the optimization process as using only GP can be too slow, as shown by the authors [1, 2].

At the beginning, a simple BT is created like in the following image.

Safe Subtree is a user defined tree that allows us to forbid some states and actions that we know are bad or more importantly, wish to forbid completely. The Learn subtree is the tree to be learned by the algorithm.

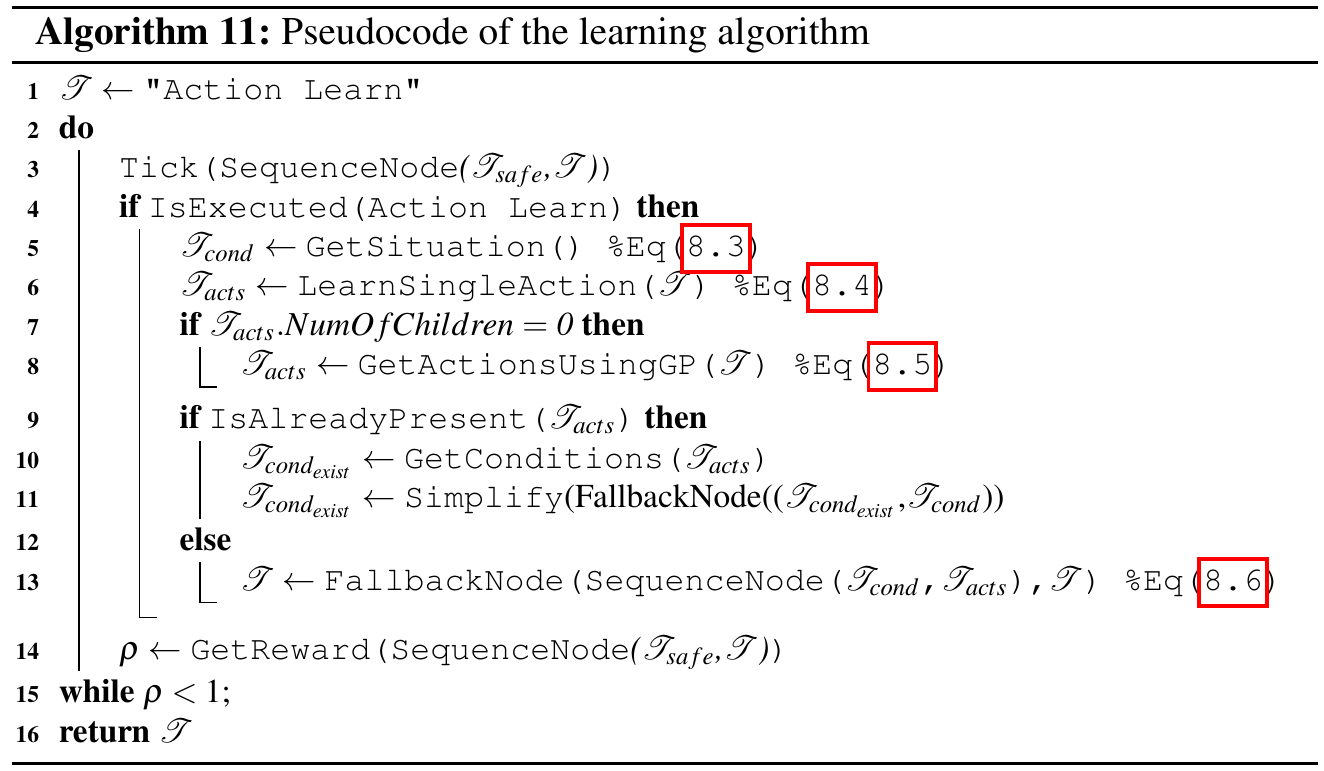

Line 3 is used to execute a tick to the BT and check if Safe Subtree was executed. If it was, we move on to the next iteration, if not, then learning can be initiated (which is checked by line 4).

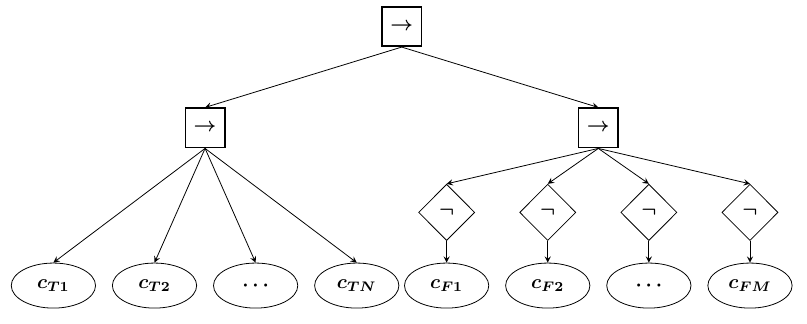

Line 5 creates a BT that represents the state of the agent in the environment. In the case of Super Mario, it would return a tree with 52 conditions and their values.

Line 6 performs a greedy algorithm to try and find an action that will improve the reward. It tries only one action at a time (not their compositions). If it fails to find it, then GP algorithm search is executed on line 8.

GP algorithm starts with 4 random BTs composed of one random control flow node and 2 random leaf nodes. Number of individuals in a generation is set to 25, and the GP algorithm is stopped when it finds a solution that improves the reward. After lines 6 (and 7 and 8), an action tree is created.

If such an action tree already exists in the original tree, then we try to find conditions that were added for the agent as an indicator on when to execute a particular action, and then try to combine the old conditions with new ones that both share a same action (line 9, 10 and 11).

If not, then a new tree is created and it gets added to the current solution tree (line 13).

Finally, a reward is calculated for the current solution that the algorithm has found (line 14).

Different scenarios for evaluation

There were 5 scenarios that were created for evaluation in [1]:

- Scenario 1 - no enemies and no gaps

- Scenario 2 - no enemies but gaps are present

- Scenario 3 - enemies present and gaps present

- Scenario 4 and 5 - similar to 3, but grow in difficulty

There is also a tree simplification step that is executed after the algorithm finishes. Simplification performs a BFS search and gives all nodes a BFS ordering. Then it iterates an all subtrees according to this ordering and checks what happens with the reward function when these subtrees (one by one) get removed from the tree.

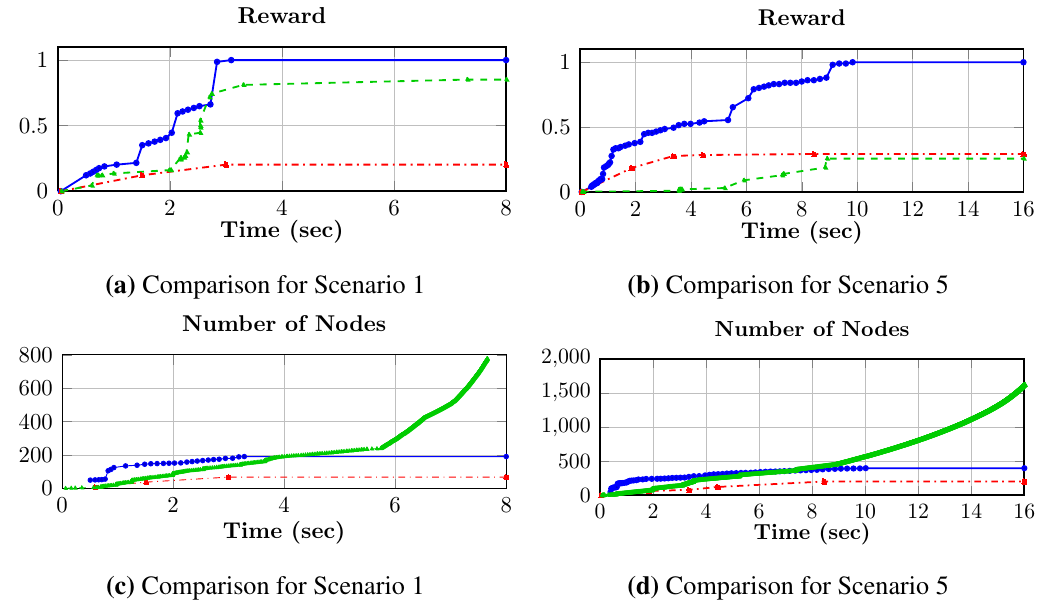

Three algorithms were compared. GP-BT (illustrated above), pure GP approach and Finite State Machine algorithm from [3]. Results are shown in the following image.

We can notice that GP-BT outperforms both algorithms on the easiest and most difficult scenario, and authors report that it was similar to other scenarios also.

Finally, here is a video of an agent developed by GP-BT approached in action in the Super Mario Benchmark.

Use case

Behavior trees are mostly used to define NPC behavior and are probably the goto option when controllable, interpretable and editable NPC behaviors are required. It is also not too difficult for game designers to understand what and when will AI do. Great bonus is game designers being able to define and edit behavior trees through a specialized GUI tool.

Paper gives a hybrid of greedy and genetic programming algorithms that can be used to generate behavior trees automatically if a fitness function is available. The output is a interpretable behavior tree of an agent that can be further modified, updated and learned from.

Resources

- [1] Learning of Behavior Trees for Autonomous Agents, Michele Colledanchise, Ramviyas Parasuraman, and Petter Ogr

- [2] Behavior Trees in Robotics and AI, Michele Colledanchise and Petter Ogren

- [3] An integrated approach of learning, planning, and execution, Ramon Garcia-Martinez and Daniel Borrajo. Journal of Intelligent and Robotic Systems, 29(1):47–78, 2000

- [4] Genetic Programming Theory and Practice, Rick Riolo, W.P. Worzel, Mark Kotanchek, Arthur Kordon